Everyone wants to buy products from leading e-commerce websites like Amazon at affordable prices. Many buyers regularly check the prices of the products they want on Amazon so that they can buy when the price is right.

However, checking product prices on Amazon every day is tedious, and it is hard to know when a price will drop. A few price monitoring tools are available online, but they can be costly and may not offer the customization you need.

In this blog, let's explore how to develop a customized Amazon price monitoring tool using Python and e-commerce web scraping.

What Is the Process for Building an Amazon Price Tracker?

- First, we will develop a basic e-commerce web scraping tool in Python that creates a master file containing each product's URL, name, and price.

- Then, we will develop a second Amazon price scraper that checks product prices every hour and compares them with the master file. The comparison reveals price drops for specific Amazon products.

- We expect at least one product on the selected list to drop in price at some point. Whenever the price of any product drops by a set percentage, the program sends a notification.

How to Develop an Amazon Web Scraping Tool for Price Monitoring Using Python?

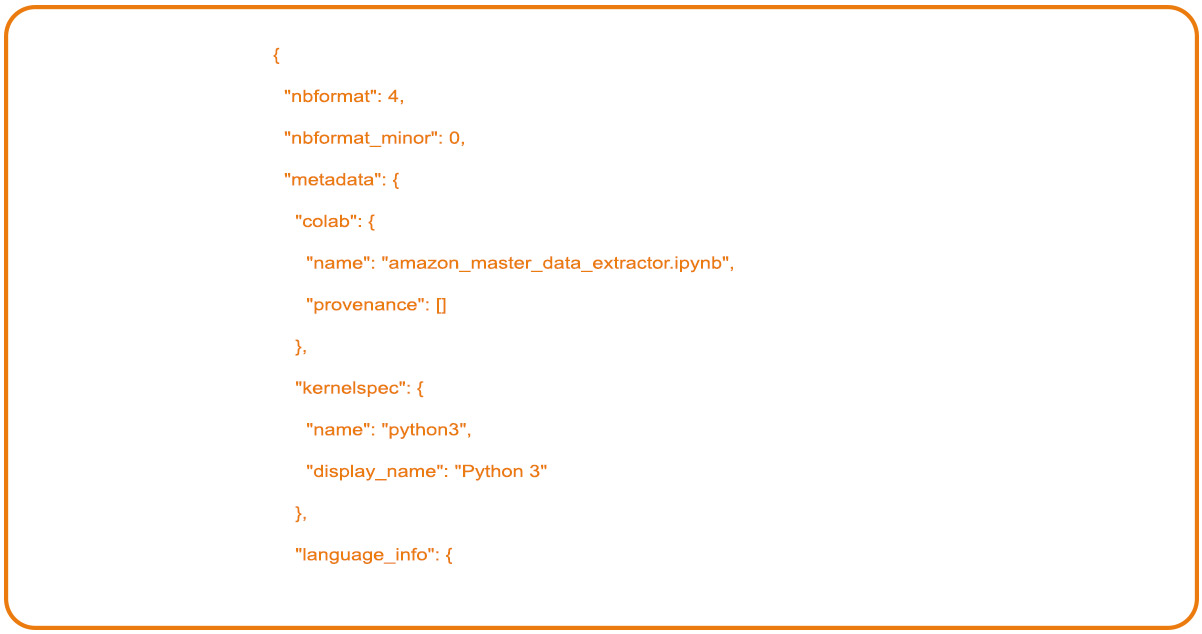

First, we gather the attributes needed to scrape Amazon pricing data. We use the BeautifulSoup, requests, and lxml Python libraries to build the master list, and Python's csv module to write the data.

For this process, we will only extract product names and prices from Amazon pages to add them to the master list. Remember that we will scrape the selling price, not the product listing price.

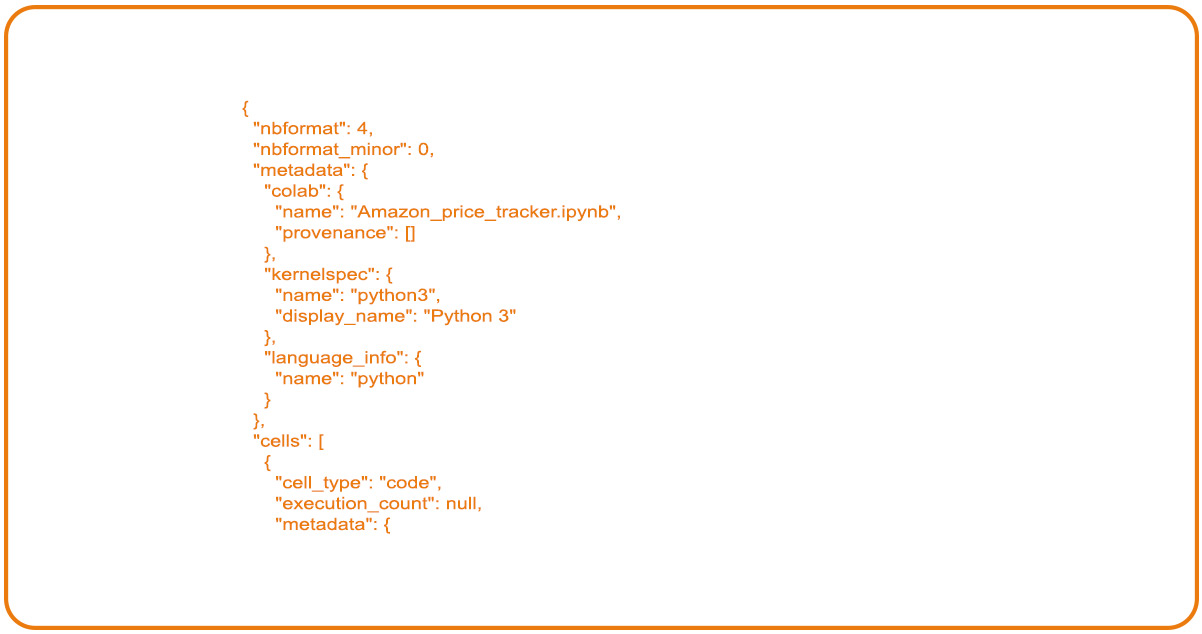

Before writing any code, we import the required Python libraries:

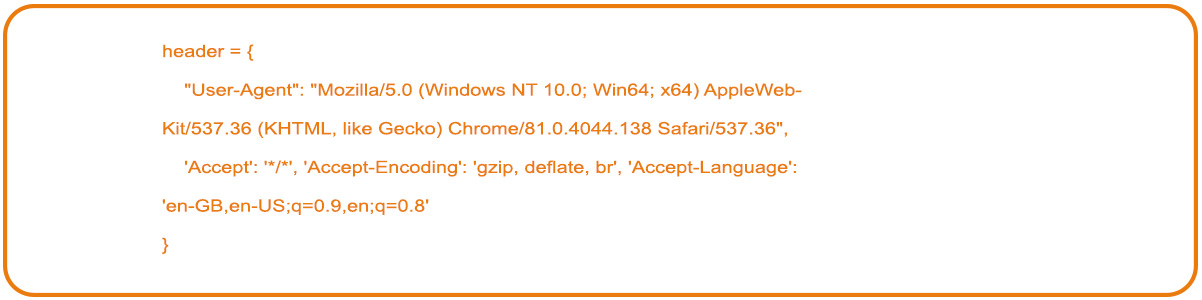

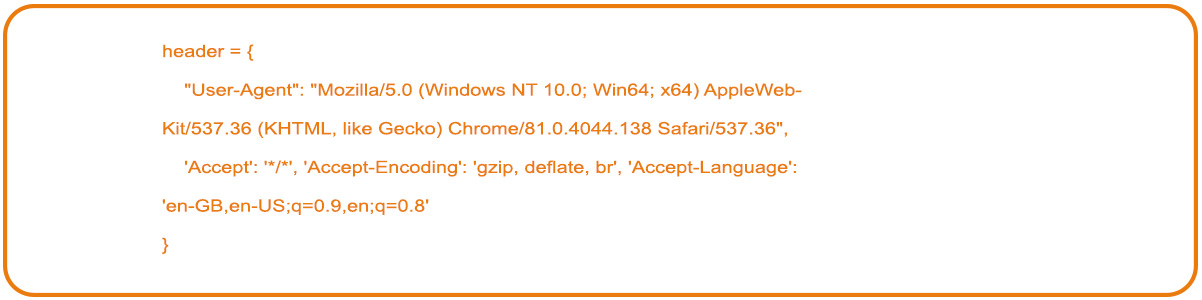

Add a Header in the Code

E-commerce platforms like Amazon do not allow bots or programs to scrape their data automatically. Amazon runs anti-scraping checks that detect and block web scraping tools. A common first step to reduce the chance of being blocked is to send proper headers with every request, so the request looks like it comes from a regular browser.

Headers are a crucial part of each HTTP request because they give the receiving platform metadata about the incoming request. We inspected the headers with Postman and added the header shown below.
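As a minimal sketch of the idea, the snippet below attaches browser-like headers to a request made with `requests`. The header values and the `fetch_page` helper are illustrative assumptions, not the exact headers captured in Postman; copy the values from your own browser or Postman session. Headers lower the chance of being blocked but do not guarantee access.

```python
import requests

# Illustrative browser-like headers (assumed values; replace them with the
# ones copied from your own browser or Postman session).
HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
        "AppleWebKit/537.36 (KHTML, like Gecko) "
        "Chrome/120.0.0.0 Safari/537.36"
    ),
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
    "Accept-Language": "en-US,en;q=0.9",
}


def fetch_page(url, timeout=10):
    """Download a page with the headers above and return its HTML text."""
    response = requests.get(url, headers=HEADERS, timeout=timeout)
    response.raise_for_status()  # surface 4xx/5xx responses as exceptions
    return response.text
```

Keeping the headers in one module-level dictionary means every request in the scraper sends the same metadata.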

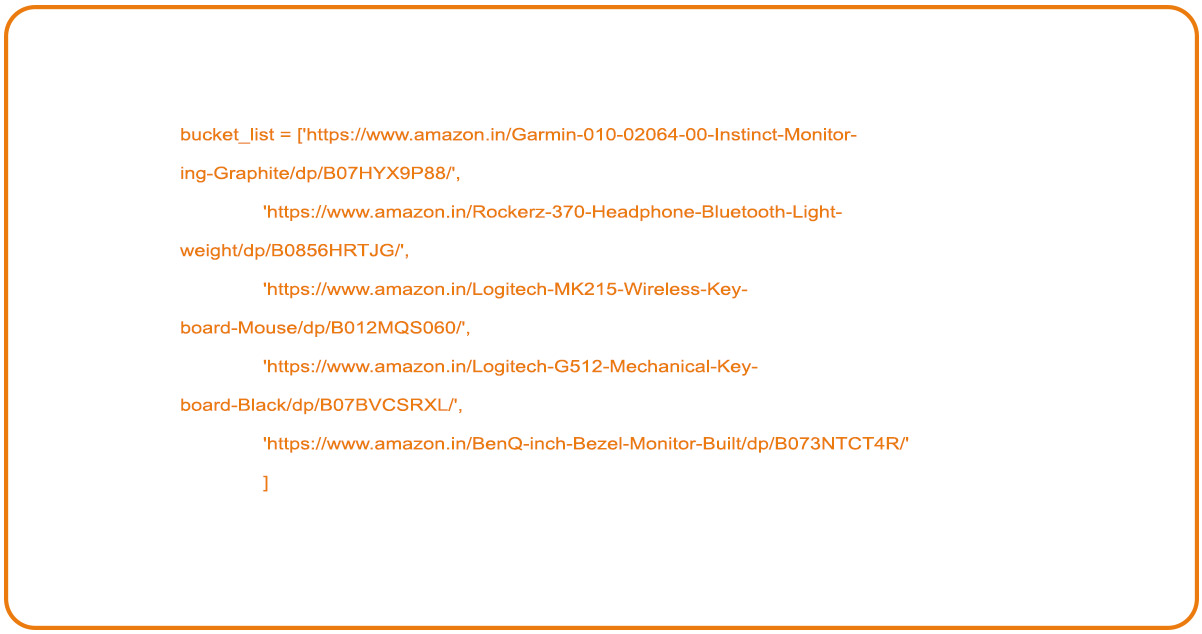

Product List Development

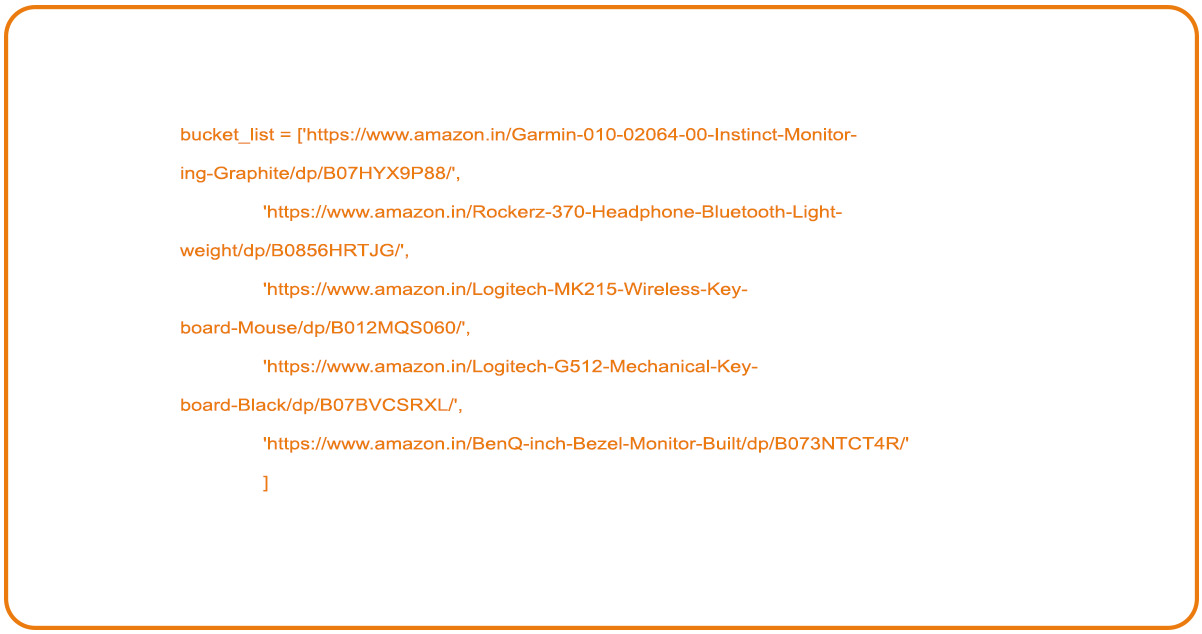

The next step in building the Amazon price tracking tool is to create a product list. Here, we have added five products to a text file as our bucket list. The Python program then reads this file and starts processing the data. For a handful of products, a list defined directly in the Python code is sufficient. However, a separate product list file is the better choice when you want to track more prices.
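Reading the bucket list could look like the sketch below. The one-URL-per-line layout and the `read_product_urls` helper are assumptions made for illustration; adapt them to the way your own list file is organized.

```python
from pathlib import Path


def read_product_urls(path):
    """Return the product URLs listed in a text file, one URL per line."""
    lines = Path(path).read_text(encoding="utf-8").splitlines()
    # Skip blank lines and lines commented out with '#'.
    return [
        line.strip()
        for line in lines
        if line.strip() and not line.strip().startswith("#")
    ]
```

Calling `read_product_urls("product_list.txt")` (a hypothetical file name) returns a plain list of URLs that the scraper can loop over.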

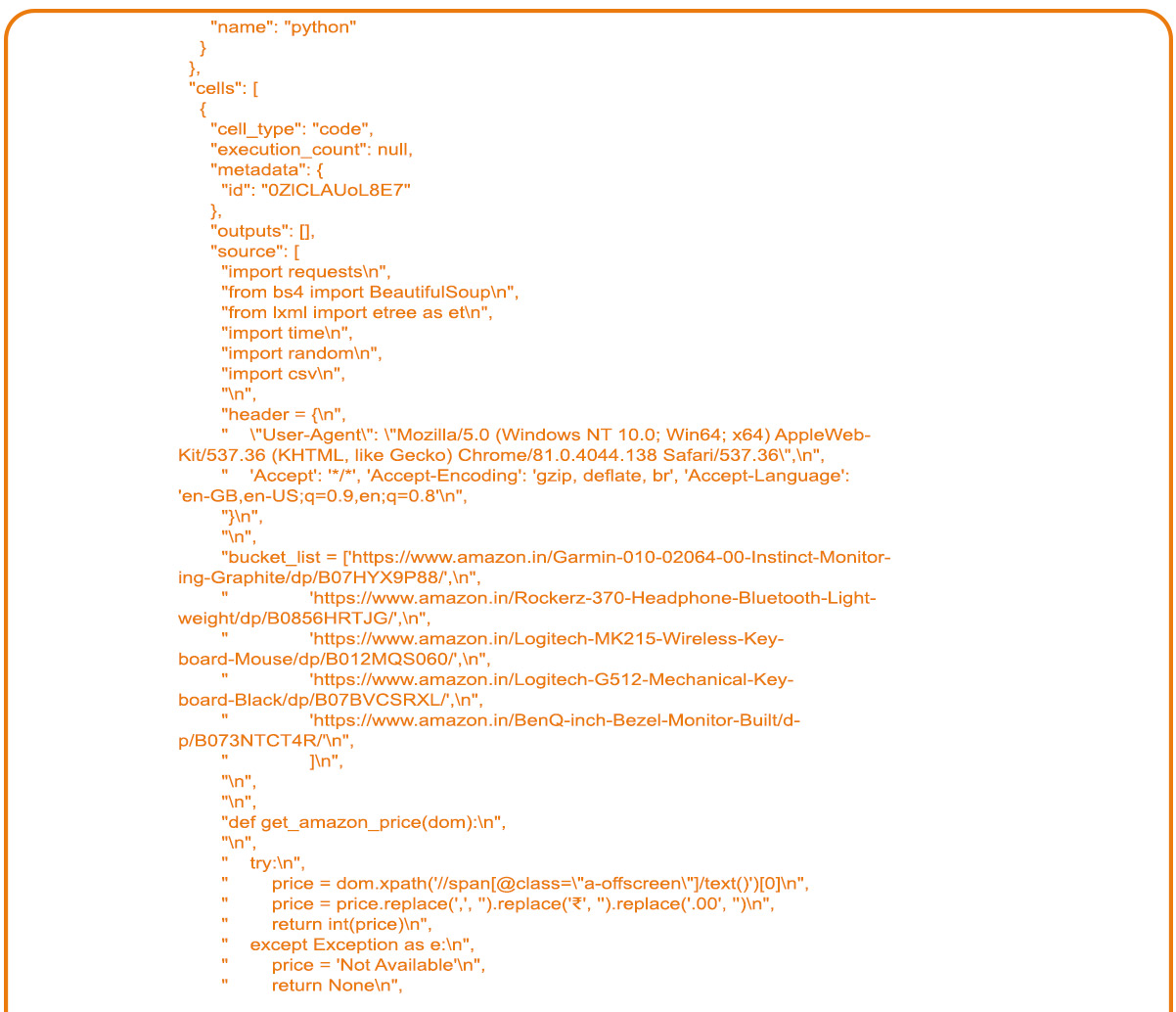

As mentioned earlier, we only track product names and prices from Amazon.

Scrape Amazon Product Names and Prices

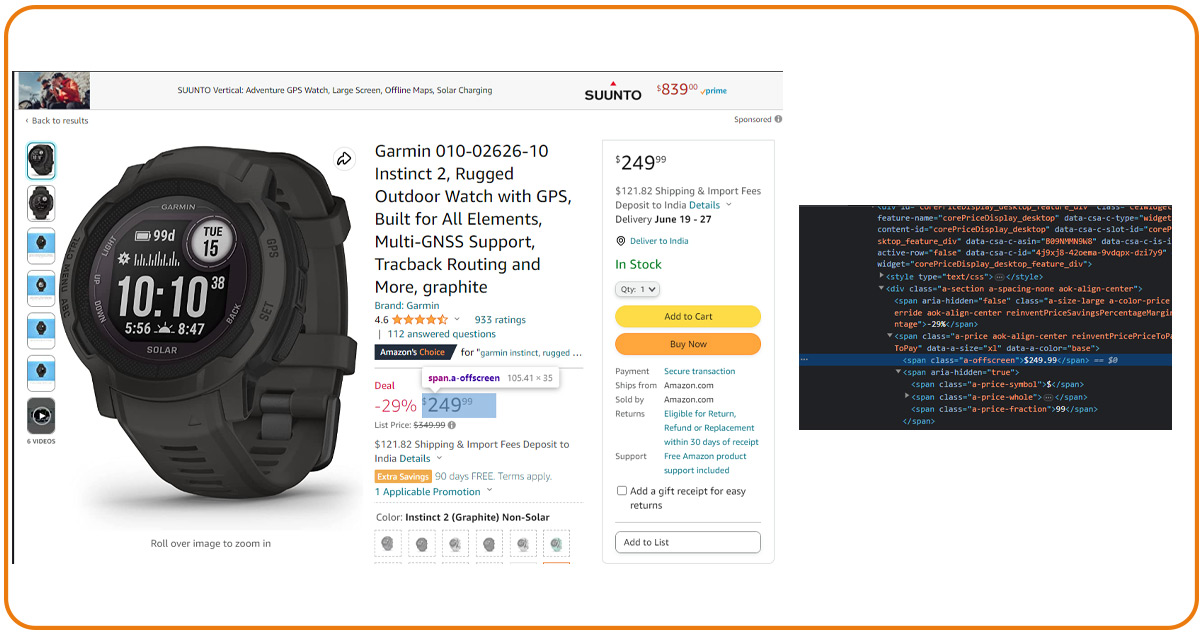

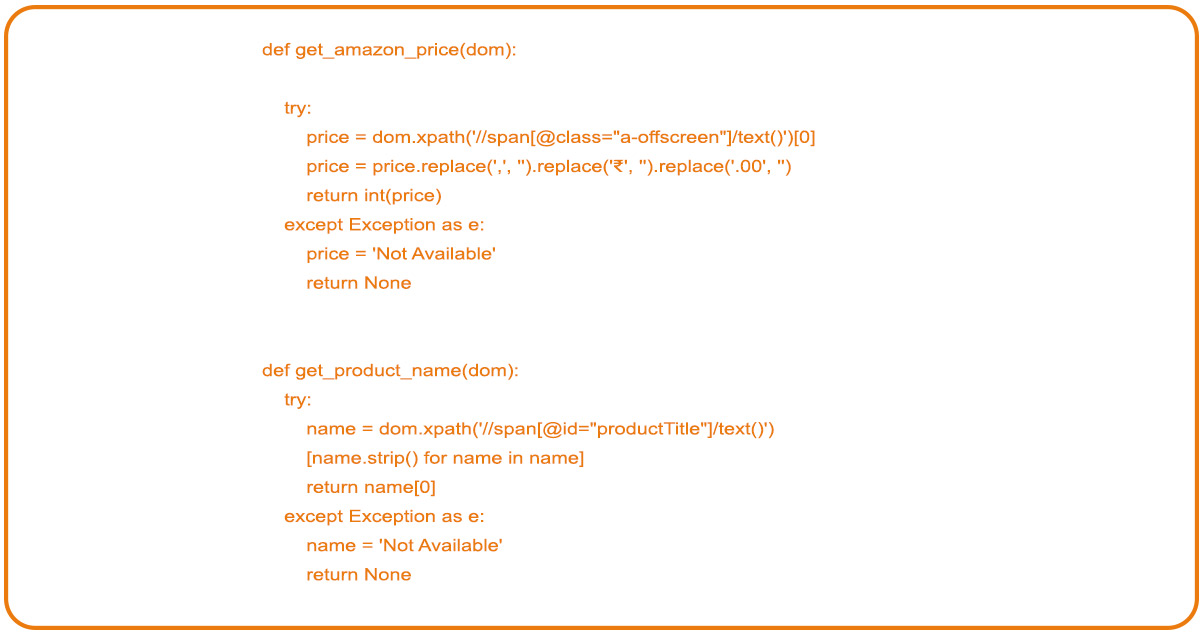

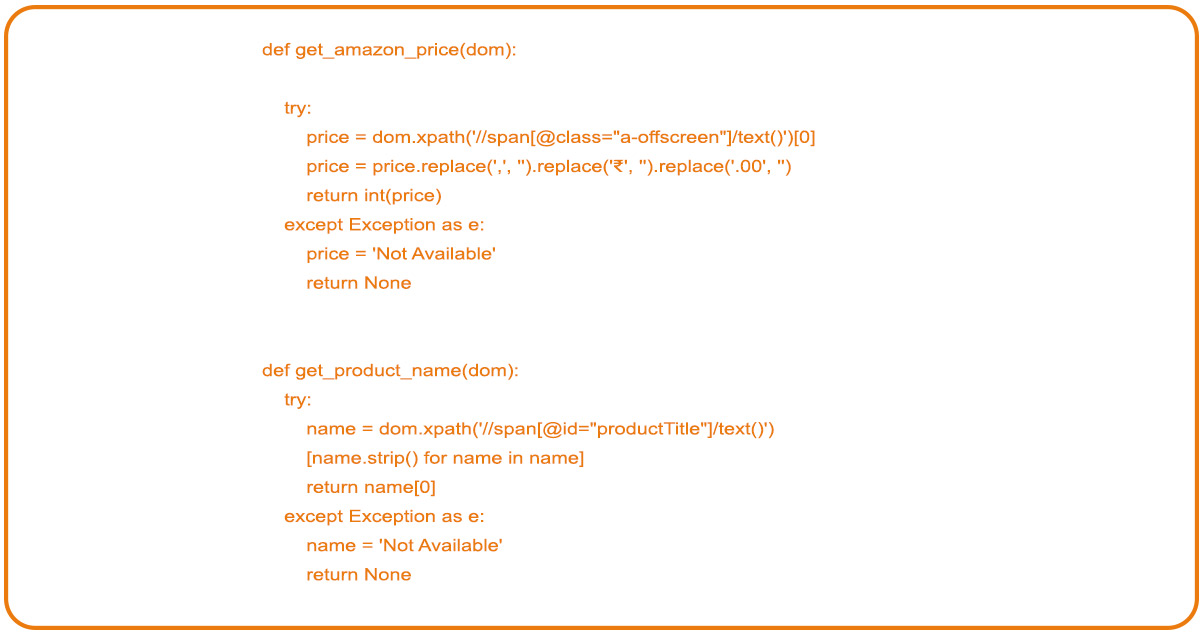

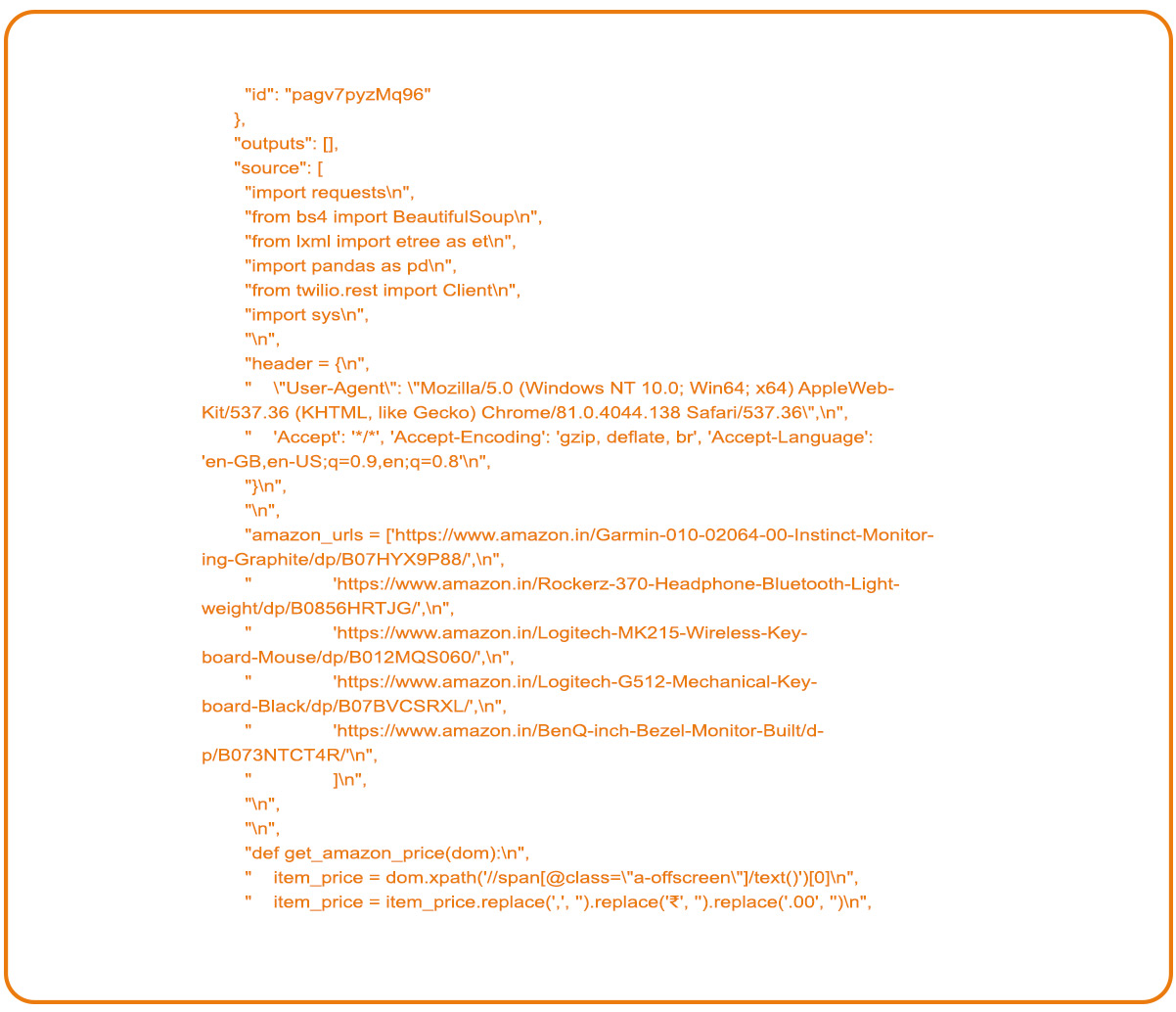

Using the BeautifulSoup and lxml Python libraries, we'll add two functions that we can call to scrape Amazon data for the selected products. These functions use XPath expressions to locate elements on the page.

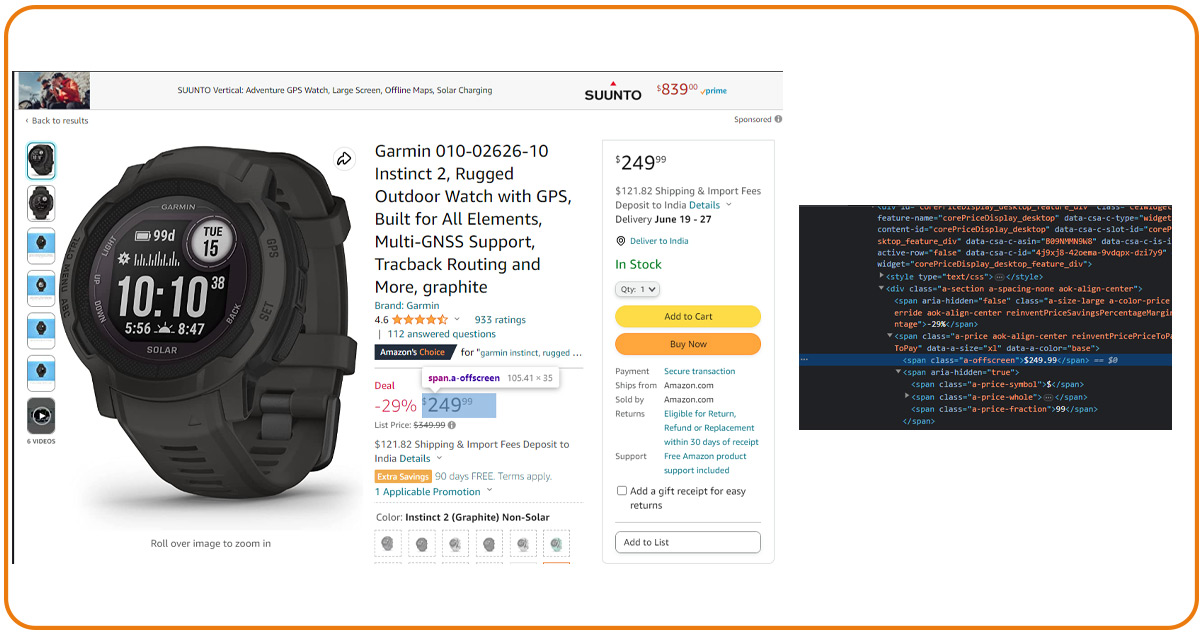

As the image shows, open the developer tools in a Chrome-based browser and inspect the product price. You will see the pricing data in a span with the class a-offscreen. We then use an XPath expression to locate that data and verify it with the developer tools.
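The sketch below shows how such XPath lookups might be written. For brevity it parses with lxml alone rather than combining it with BeautifulSoup. The `productTitle` id, the helper names, and the sample page are assumptions for illustration; Amazon changes its markup often, so confirm both expressions in the developer tools.

```python
from lxml import html

# XPath expressions (assumptions based on the markup described above;
# verify them against the live page in developer tools).
NAME_XPATH = '//span[@id="productTitle"]/text()'
PRICE_XPATH = '//span[@class="a-offscreen"]/text()'


def first_text(dom, xpath):
    """Return the first non-empty text match for an XPath, or None."""
    for match in dom.xpath(xpath):
        text = match.strip()
        if text:
            return text
    return None


def get_product_name(dom):
    """Return the product title text, or None if it is not found."""
    return first_text(dom, NAME_XPATH)


def get_raw_price(dom):
    """Return the displayed selling price as a string, or None."""
    return first_text(dom, PRICE_XPATH)


# A tiny stand-in for a downloaded product page.
SAMPLE_PAGE = """
<html><body>
  <span id="productTitle">  Example Wireless Mouse  </span>
  <span class="a-price"><span class="a-offscreen">$1,299.00</span></span>
</body></html>
"""

dom = html.fromstring(SAMPLE_PAGE)
print(get_product_name(dom))  # Example Wireless Mouse
print(get_raw_price(dom))     # $1,299.00
```

Returning `None` when nothing matches lets the caller skip a product instead of crashing when the page layout changes.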

To detect a price drop for the selected products, we must scrape the current Amazon price and compare it with the master data. The scraped price is a string, so we need some string manipulation to convert it into a format we can compare.
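A minimal sketch of that string clean-up is shown below. It assumes US-style prices such as `$1,299.00`, where the comma is a thousands separator and the dot is the decimal point; the `parse_price` name is hypothetical.

```python
import re


def parse_price(raw_price):
    """Convert a displayed price such as '$1,299.00' to a float."""
    # Keep digits and the decimal point; drop currency symbols,
    # thousands separators, and whitespace.
    cleaned = re.sub(r"[^\d.]", "", raw_price)
    if not cleaned:
        raise ValueError(f"No numeric price found in {raw_price!r}")
    return float(cleaned)


print(parse_price("$1,299.00"))  # 1299.0
print(parse_price(" $24.99 "))   # 24.99
```

Marketplaces that use a comma as the decimal separator would need a different rule.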

Data Writing for Master File Development

To add the scraped Amazon product data to the master file, we will use Python's built-in csv module. Check the code below.

Note the following points:

- The master data file contains three columns: product price, name, and URL.

- We loop through the product list and parse each URL to get the data.

- We add a random delay between requests to space them out.
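The points above can be sketched as follows. The column names, the `build_master_file` helper, and the 2 to 5 second delay range are assumptions; `scrape_product` stands in for whatever function returns a product's name and price for a URL.

```python
import csv
import random
import time


def build_master_file(urls, scrape_product, path="master_data.csv",
                      delay_range=(2, 5)):
    """Scrape every URL once and write the results to a CSV file.

    `scrape_product` is any callable that takes a URL and returns a
    (name, price) tuple; it stands in for the scraping functions.
    """
    with open(path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.writer(handle)
        writer.writerow(["url", "name", "price"])  # assumed column names
        for url in urls:
            name, price = scrape_product(url)
            writer.writerow([url, name, price])
            # Pause for a random interval so requests are not sent back to back.
            time.sleep(random.uniform(*delay_range))
```

Passing the scraping function in as an argument keeps the file-writing logic easy to try out with sample data before pointing it at live pages.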

After running the above code snippets, you will find the generated master_data.csv file. You only need to run this code once.

Developing the Tool for Amazon Price Monitoring

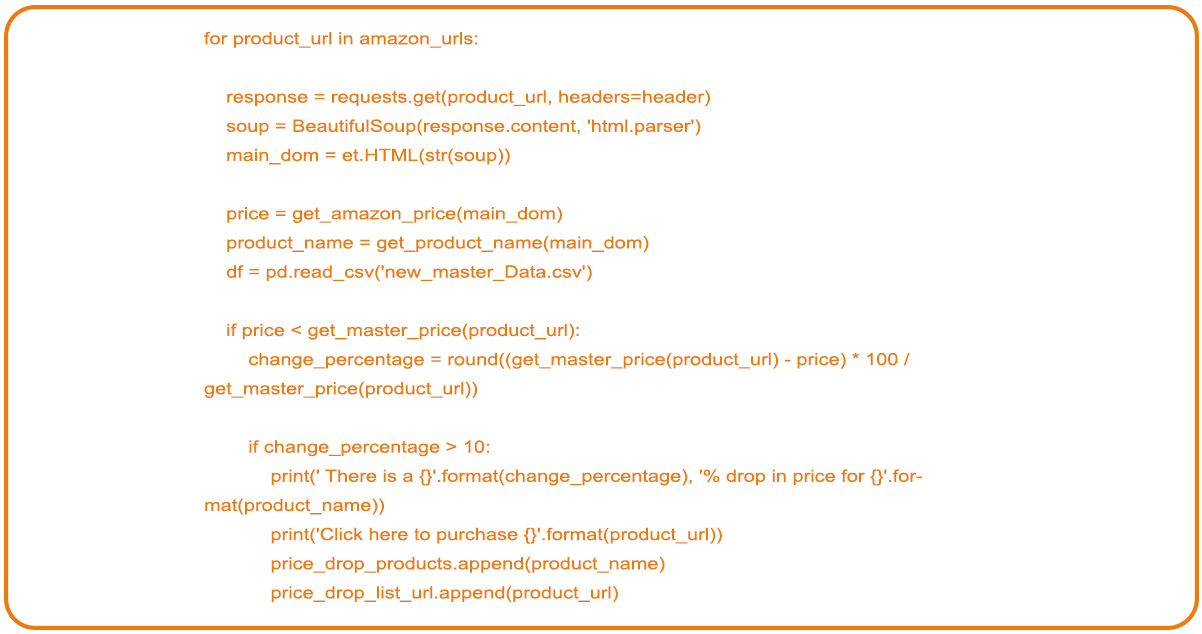

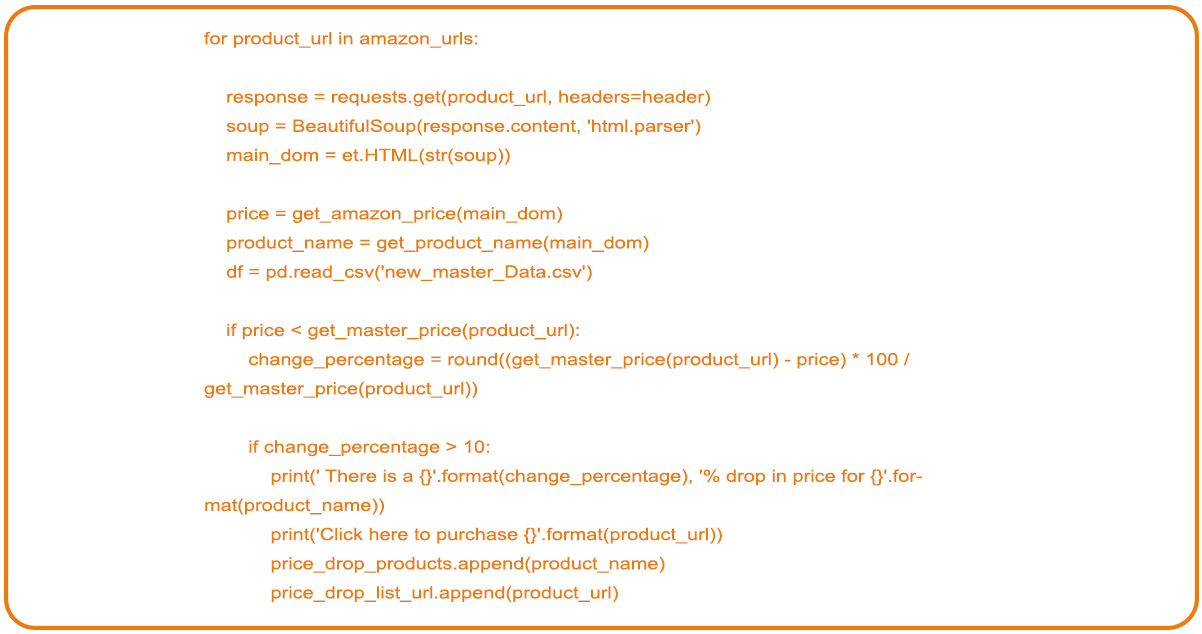

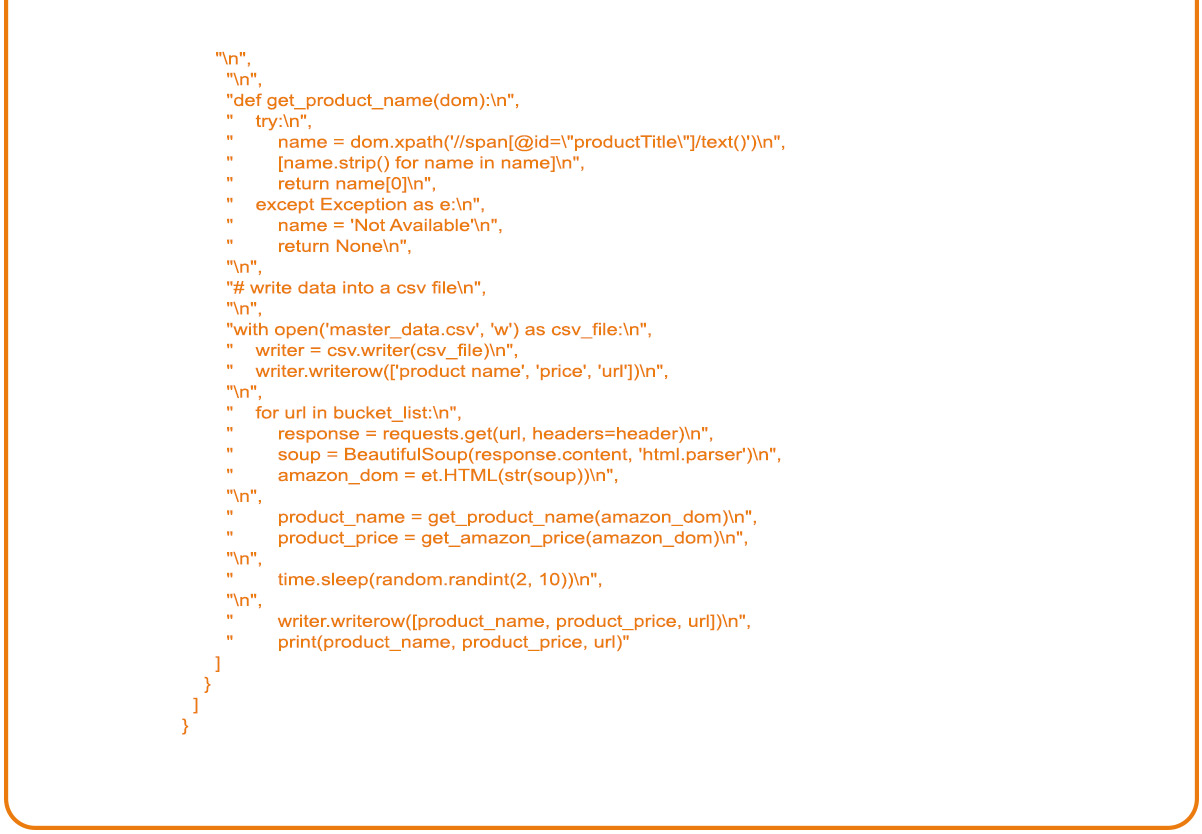

We have now set up the master data to compare against. It's time to build the second Python program, which scrapes the current Amazon product prices and compares them with the master data.

Import Python Libraries

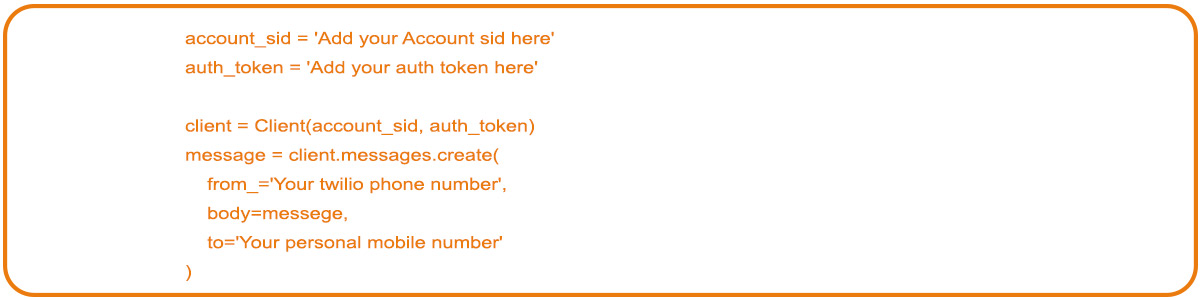

To develop the tracker code, we also import the pandas and Twilio libraries.

Pandas

pandas is an open-source data manipulation and analysis library. Its DataFrame data structure lets programmers handle spreadsheets and other tabular data formats inside Python code.

Twilio

Twilio simplifies sending SMS alerts programmatically. We chose it because its free usage is sufficient for our project.

Starting the Amazon Data Collection Process

For this program, we'll reuse most of the functions above. However, we add one more function that looks up the master-data price for the URL currently being scraped.
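A lookup of this kind could be sketched with pandas as shown below. The `url` and `price` column names and the `get_master_price` helper are assumptions; match them to the columns in your own master file.

```python
import pandas as pd


def get_master_price(url, master_path="master_data.csv"):
    """Return the stored price for a URL, or None if the URL is not listed."""
    master = pd.read_csv(master_path)
    # Select the price column for rows whose URL matches exactly.
    matches = master.loc[master["url"] == url, "price"]
    if matches.empty:
        return None
    return float(matches.iloc[0])
```

Re-reading the file on every call is fine for a short product list; for longer lists, loading the DataFrame once and reusing it would avoid repeated disk access.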

To record the products with price drops, we define two lists: one for product names and one for URLs.

Start Checking Amazon Product Price Drops

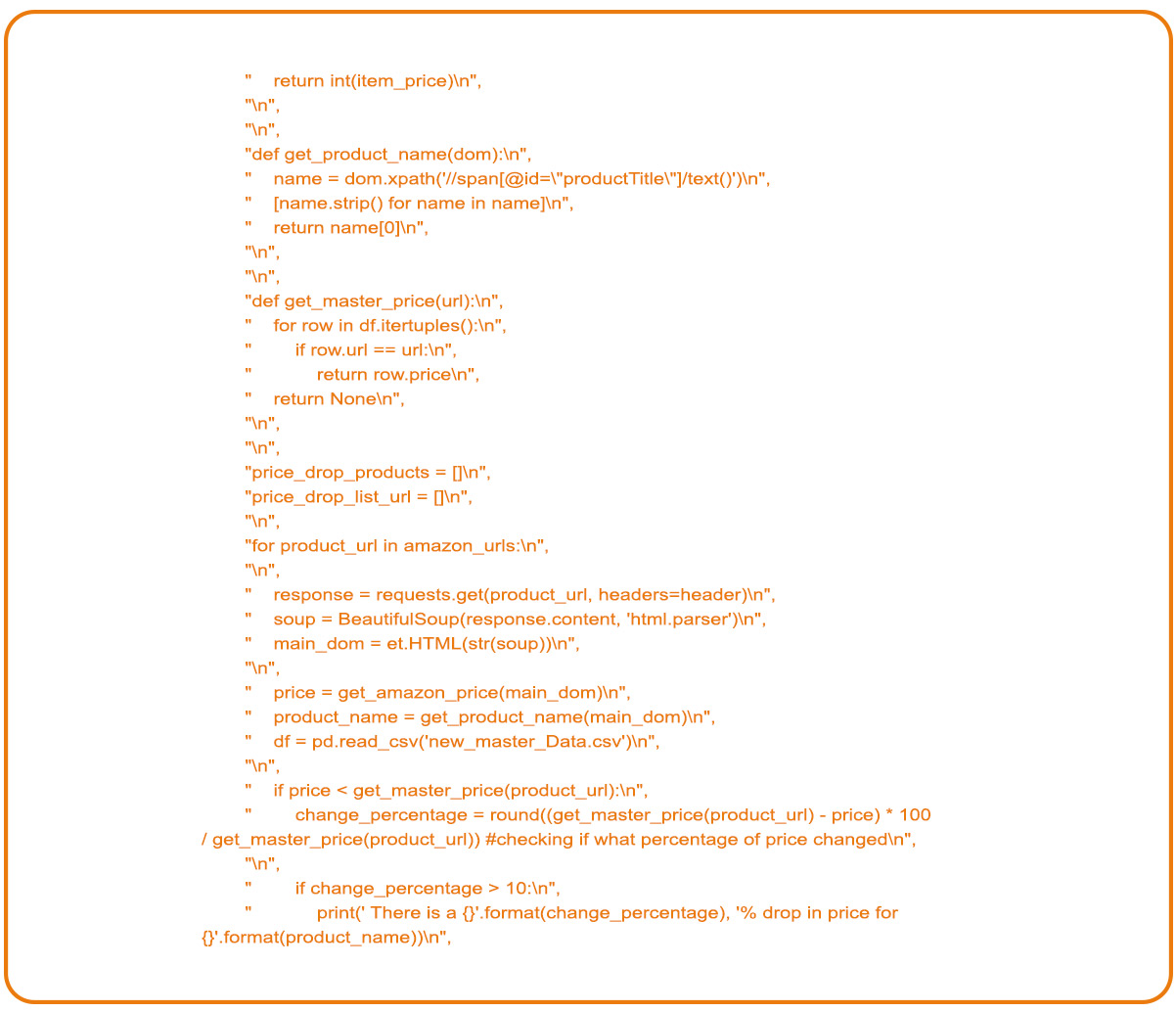

We'll walk through all the product pages, note the current selling price of each selected product, compare it with the master file, and check whether the price has dropped by more than 10 percent. If so, we add the product to the lists defined earlier.
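The comparison can be sketched as below. The function names and the tuple layout of `products` are assumptions made for illustration; the 10 percent threshold comes from the description above.

```python
DROP_THRESHOLD_PERCENT = 10  # alert when the price falls by more than this


def percent_drop(master_price, current_price):
    """Return how far the current price is below the master price, in percent."""
    return (master_price - current_price) / master_price * 100


def find_price_drops(products, threshold=DROP_THRESHOLD_PERCENT):
    """Collect the names and URLs of products whose price dropped enough.

    `products` is an iterable of (name, url, master_price, current_price).
    """
    dropped_names, dropped_urls = [], []
    for name, url, master_price, current_price in products:
        if percent_drop(master_price, current_price) > threshold:
            dropped_names.append(name)
            dropped_urls.append(url)
    return dropped_names, dropped_urls


sample = [
    ("Mouse", "https://www.amazon.com/dp/AAA", 100.0, 85.0),    # 15% drop
    ("Keyboard", "https://www.amazon.com/dp/BBB", 50.0, 48.0),  # 4% drop
]
print(find_price_drops(sample))
# (['Mouse'], ['https://www.amazon.com/dp/AAA'])
```

A price increase yields a negative percentage, so it never crosses the threshold.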

If no product shows a price drop, the program can simply exit without invoking the Twilio library.

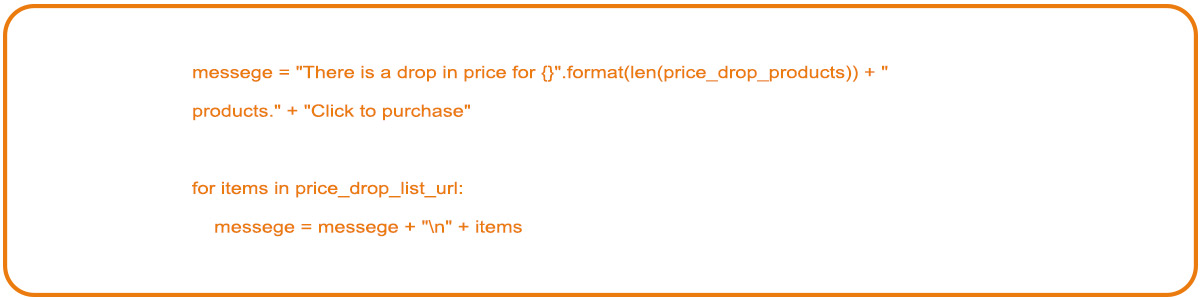

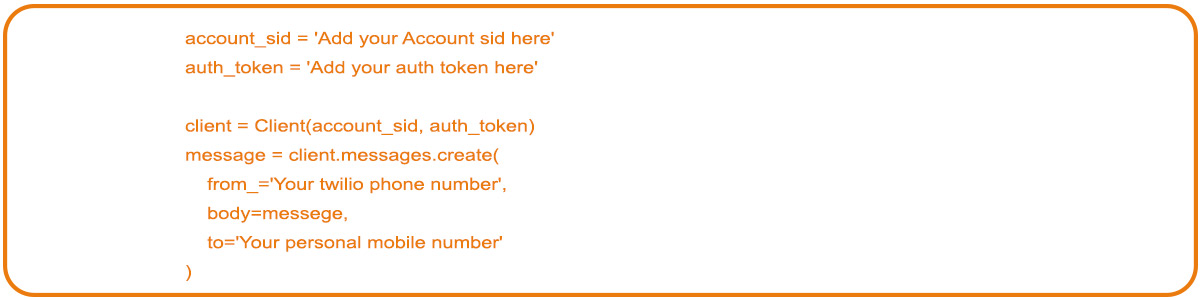

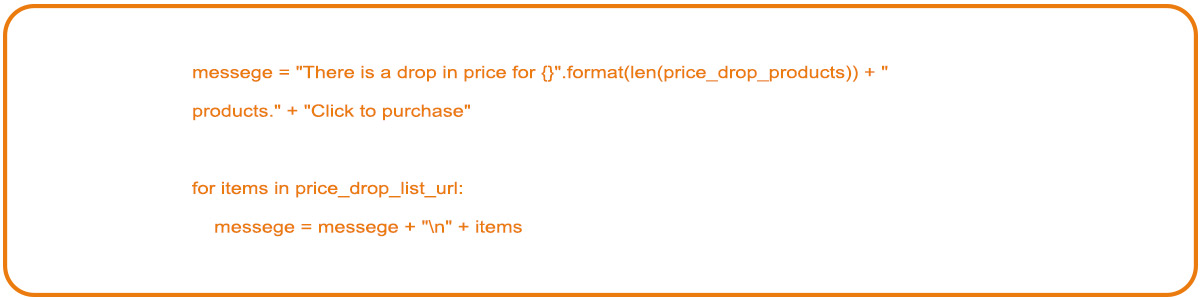

However, if any price has dropped, we invoke the Twilio library and send an SMS alert. The first step is to create a message body with the relevant content.

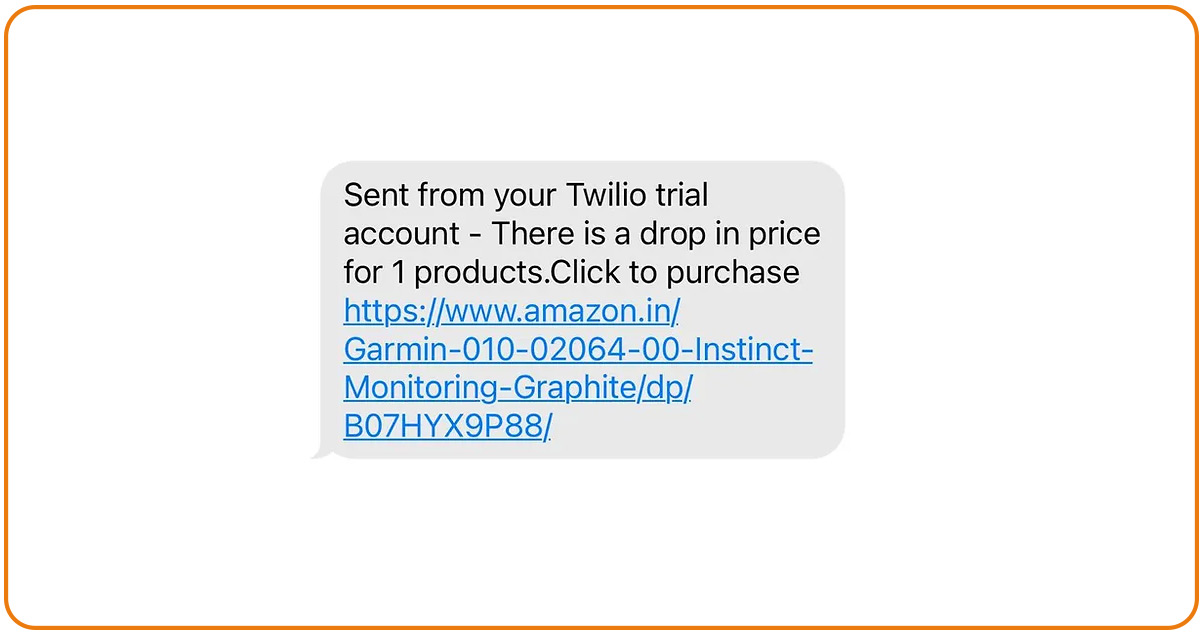

The above image shows the sample message body that we used. You can change it as you wish to send different alerts.
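One way to assemble such a message body is sketched below. The wording of the alert and the `build_message_body` helper are assumptions, not the exact text shown in the image.

```python
def build_message_body(names, urls):
    """Build the SMS text listing every product with a price drop."""
    lines = ["Price drop alert! The following products are now cheaper:"]
    for name, url in zip(names, urls):
        lines.append(f"- {name}: {url}")
    return "\n".join(lines)
```

Keeping the text in its own function makes it easy to reword the alert without touching the sending code.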

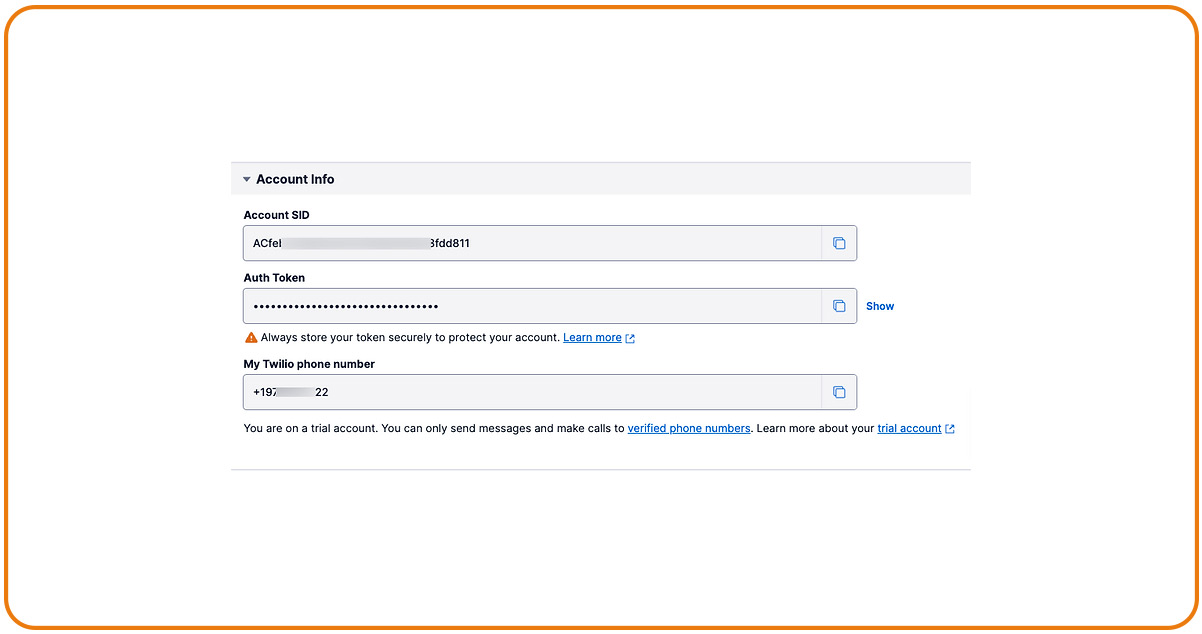

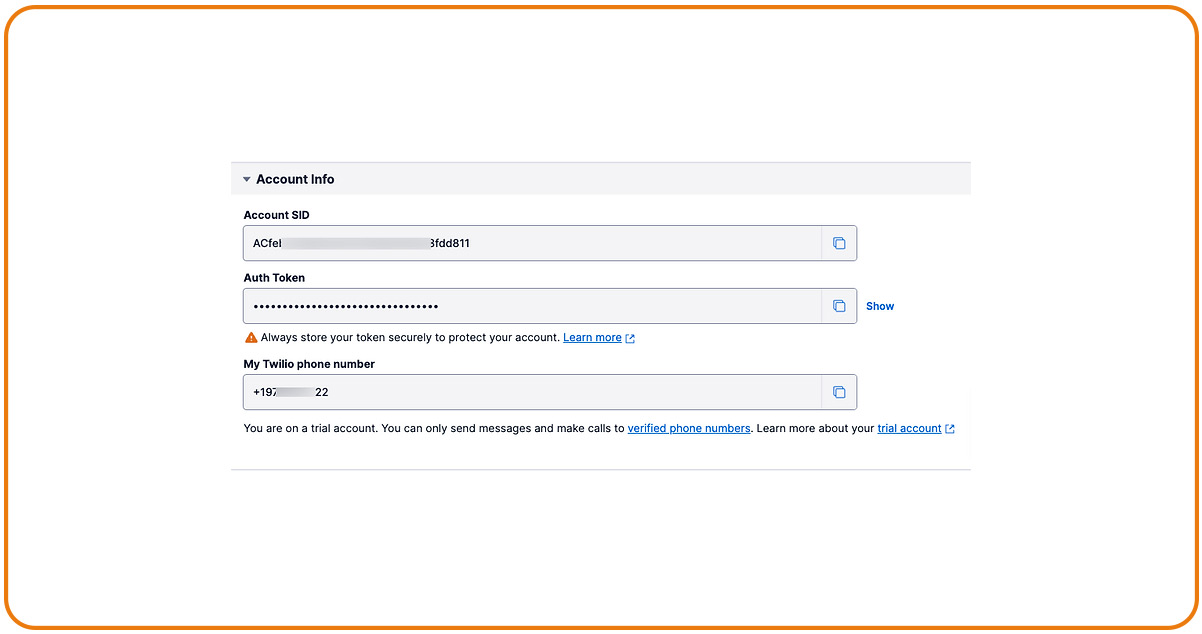

After that, we register on Twilio and note the account SID and auth token.

Check the image below to see how it looks after registering and signing in.
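With the account SID and auth token in hand, sending the alert might look like the sketch below. The `send_sms_alert` helper, the environment variable names, and the phone numbers are assumptions; the client is passed in so the function can be tried with a stand-in object before using real credentials.

```python
def send_sms_alert(client, body, from_number, to_number):
    """Send one SMS through a Twilio REST client and return the message SID."""
    message = client.messages.create(body=body, from_=from_number, to=to_number)
    return message.sid


# Typical wiring (requires `pip install twilio` and a Twilio account):
#
#   import os
#   from twilio.rest import Client
#
#   client = Client(os.environ["TWILIO_ACCOUNT_SID"],
#                   os.environ["TWILIO_AUTH_TOKEN"])
#   send_sms_alert(client, "Price drop alert!", "+15550001111", "+15552223333")
```

Reading the credentials from environment variables keeps them out of the source file.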

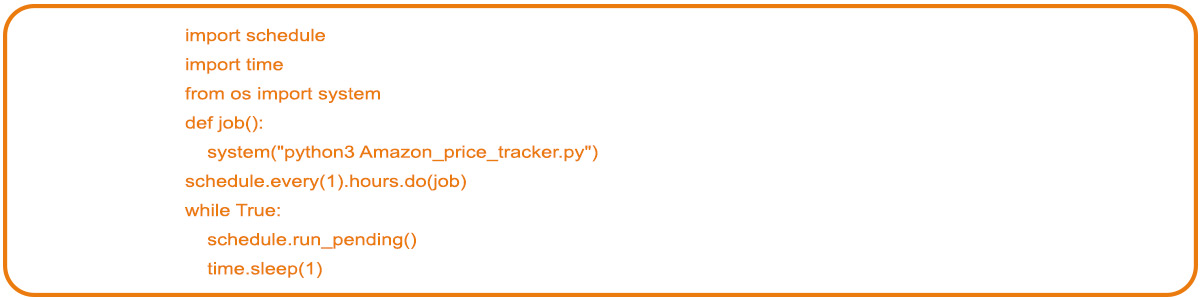

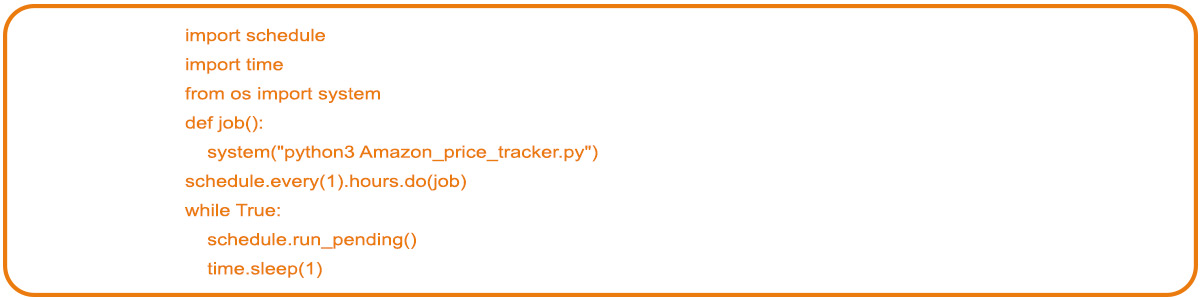

Automation of the Tool for Hourly Scraping

Manually running the scraper every hour is impractical when you have other commitments. Therefore, we automate the Amazon product data scraping process so that it runs at the required hourly frequency.

We use Python's schedule library for this. Here is example scheduling code.

We only need to start the program once; the schedule then runs the price check automatically and provides the Amazon price monitoring data each hour.

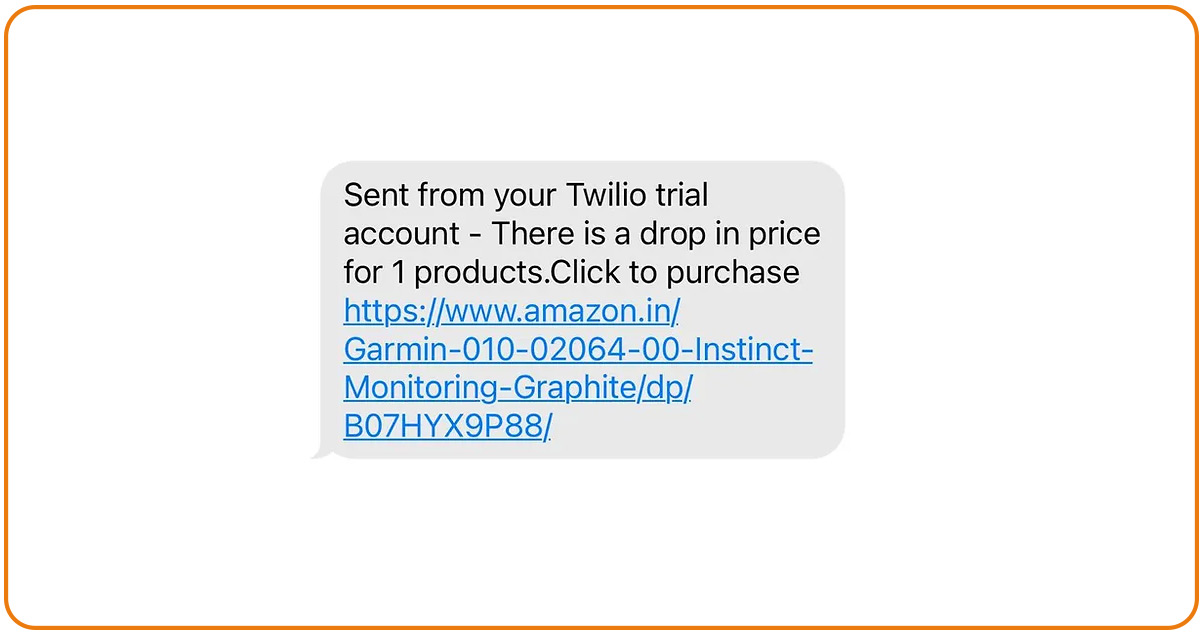

Program Testing

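To test the program, change the product prices in the master file (for example, raise them so that the current prices look like a drop of more than 10 percent) and execute the code. You will get an SMS alert if any product has a reduced price.

We changed the prices in the master data, executed the code, and received the following SMS.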

Conclusion

That is how you can build an Amazon price monitoring tool to check product prices, compare them with expected prices, and buy products at affordable costs. Contact the Product Data Scrape team if you need help understanding the process or want a customized solution for e-commerce data scraping services.
