Introduction

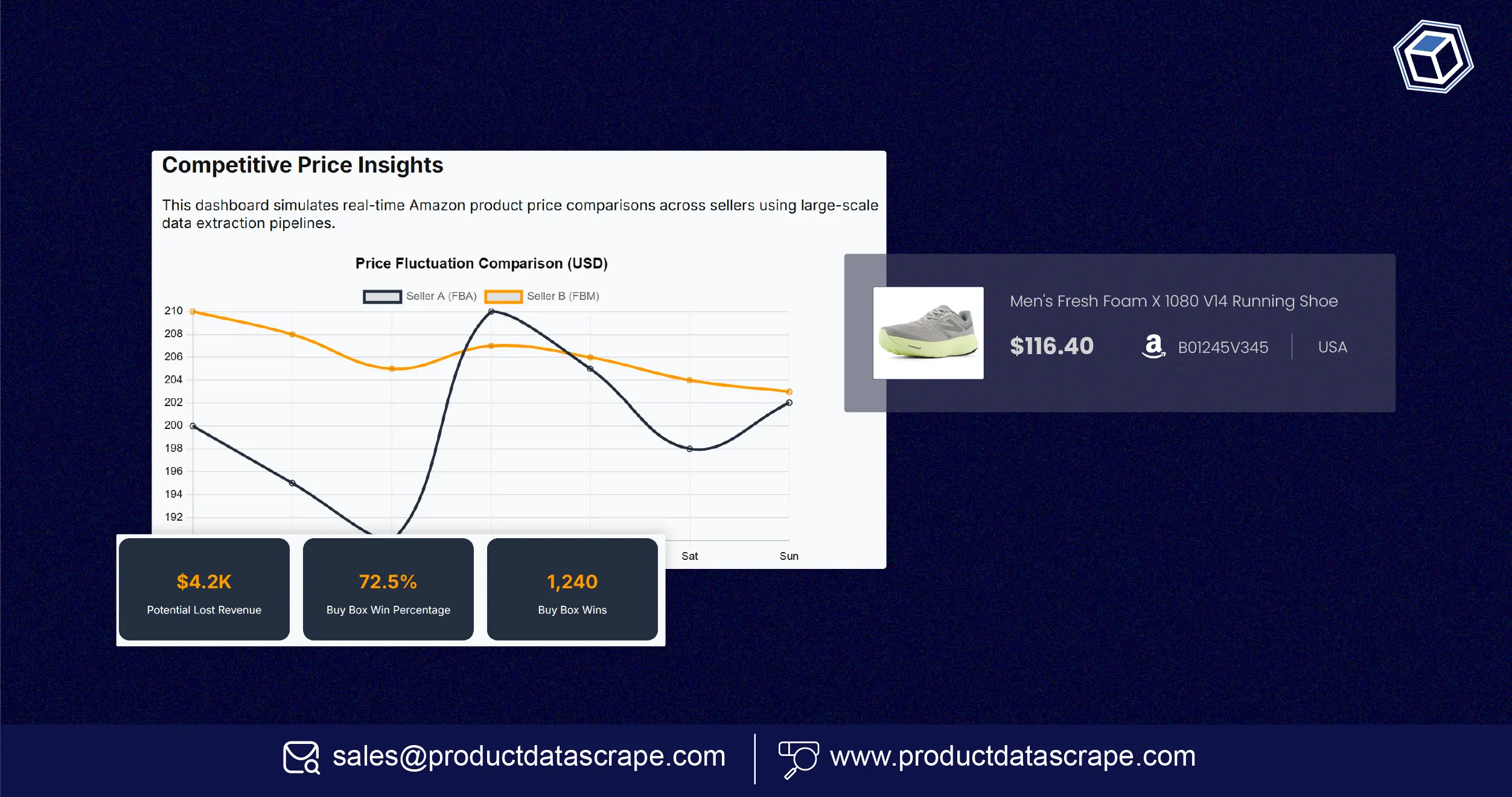

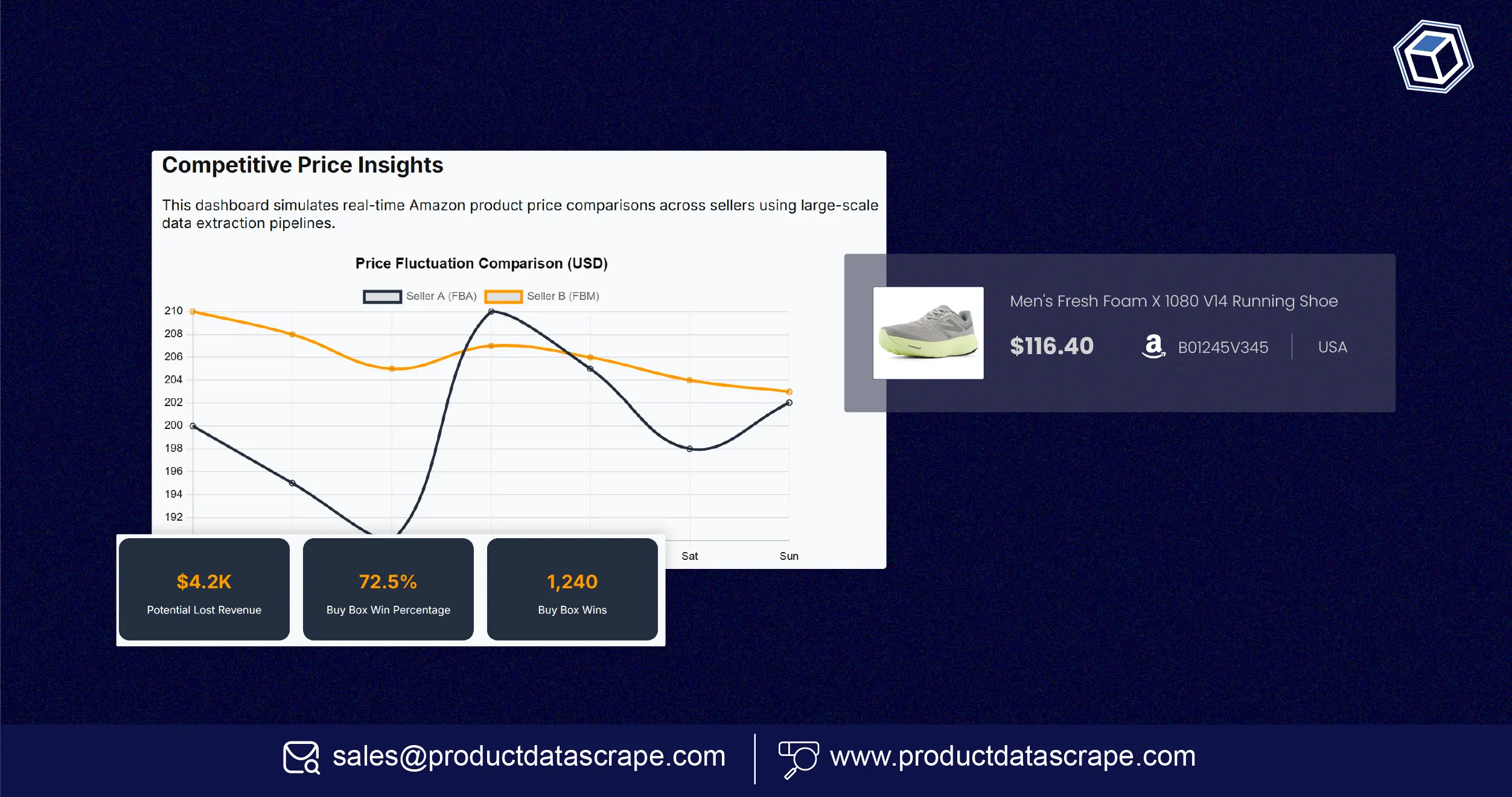

In a digital marketplace driven by precision and competition, Amazon price

scraping at scale for analytics has become the cornerstone of pricing intelligence.

With billions of SKUs, frequent price changes, and personalized offers,

Amazon’s pricing ecosystem is both a goldmine and a labyrinth for data analysts and pricing

strategists. Businesses today rely on accurate, timely, and structured pricing data to make

smarter pricing, assortment, and promotion decisions.

However, getting that data is not as simple as running a scraper overnight.

Amazon’s layered infrastructure, bot-detection systems, and dynamic rendering make automated

extraction complex and error-prone.

That’s where specialized web scraping service providers come in — bringing

scalable infrastructure, intelligent proxy management, and compliance frameworks to handle

Amazon’s evolving defenses.

For brands, retailers, and market analysts, web scraping Amazon for price

intelligence enables actionable insights into competitor strategies, Buy Box dynamics, and

pricing trends across geographies. It transforms raw HTML into structured, analytics-ready data

that fuels smarter decision-making.

Amazon Price Scraping: Harder Than It Appears

Amazon’s pricing environment changes thousands of times per minute — sometimes

per second. Dynamic repricing algorithms respond to competitor offers, user behavior, and

inventory signals. When companies attempt to extract this data manually or with generic tools,

they face ever-shifting pages, CAPTCHAs, and blocks.

Unlike static sites, Amazon generates much of its product data dynamically

through JavaScript, meaning simple crawlers often fail to detect real prices. Maintaining

accuracy requires continuous adaptation — a process too time-consuming for small teams.

Enterprise-grade providers handle these complexities through headless browsers, adaptive

parsers, and proxy orchestration.

Large-scale Amazon pricing data collection for analytics allows businesses to

track millions of ASINs efficiently without violating compliance standards. Such setups ensure

that every price is tied to its source, time, and region, creating verifiable datasets that

power pricing optimization models.

With the right web scraping strategy, companies gain not just visibility into

current prices but also trendlines over time, enabling predictive insights that go beyond

reactive price changes.

Why DIY Scripts and Free Tools Fail Quickly

At first glance, scraping Amazon with open-source tools, such as Python-based

Amazon scraper scripts, seems tempting. They work for a few hours, maybe even a day, until IP bans,

throttling, or CAPTCHA loops appear. DIY scrapers aren’t built to handle session persistence,

rotating proxies, or regional variations, all of which are crucial for Amazon.

Once blocked, recovery becomes painful: you lose progress, compromise data

quality, and risk missing critical updates in a fast-moving market. Each fix demands new

engineering time, which adds up fast.

This is why relying solely on free tools for high-volume data extraction is

unsustainable. Managed web scraping solutions use distributed architectures, rotating

residential IPs, and self-healing parsers that automatically adjust to layout changes.

In contrast to short-lived scripts, these solutions deliver clean, ready-to-use

datasets for machine learning and analytics teams. When you need to scrape laptop data from

Amazon for an ML project, scalable infrastructure ensures complete, labeled datasets with

consistent schema — no manual patching or re-scraping.

Professionally managed pipelines free your team from maintenance headaches,

allowing them to focus on deriving insights instead of debugging scrapers.

Struggling with unreliable scrapers? Switch to Product Data Scrape for

accurate, scalable, and compliant Amazon pricing data — start today!

Contact Us Today!

What Professional Web Scraping Services Do Differently

The difference between DIY scraping and managed web scraping is like comparing

a homemade drone to a satellite. Professional providers don’t just collect data — they guarantee

consistency, compliance, and scale.

Enterprise-level setups combine multiple technologies: rotating proxy pools,

browser automation, and real-time monitoring. Each request is optimized for efficiency and

anonymity, ensuring that scraping activities remain undetected while maintaining high success

rates.

Such systems can handle millions of requests daily, with adaptive load

balancing to avoid detection or blocking. They use structured extraction templates that evolve

automatically as Amazon updates its HTML structure.

When combined with real-time Amazon price scraping for market research, these

capabilities enable near-instant visibility into competitor pricing trends, promotions, and

market fluctuations. Businesses can feed this continuous stream of verified data into pricing

engines, enabling dynamic pricing and rapid decision-making.

By outsourcing scraping operations to experienced providers, brands gain access

to infrastructure, monitoring dashboards, and compliance expertise — turning data collection

into a predictable, scalable function rather than a maintenance burden.

Amazon’s Unique Obstacles Require Expertise

Amazon’s pricing pages are notoriously complex. Between geo-based localization,

A/B tests, and personalized recommendations, two users may not see the same price. That makes

Amazon price scraping at scale for analytics especially challenging.

Professional scraping teams overcome these hurdles with smart browser

automation, session simulation, and real-time validation pipelines. They maintain thousands of

active proxy IPs across countries, ensuring regional coverage and avoiding bias in pricing data.

Even minor HTML changes — like shifting a price container or modifying AJAX

calls — can break poorly designed scrapers. Expert teams monitor such changes continuously,

automatically redeploying parsers when required.

In addition, advanced techniques like headless Chrome rendering and anti-bot

fingerprinting ensure high success rates for web scraping Amazon products for analytics, even

when pages dynamically load after user interaction.

With the right tools, scrapers can extract clean, verified prices from millions

of listings daily — all without violating Amazon’s terms or impacting performance. Such depth of

automation allows data analysts to focus on insights, not maintenance.

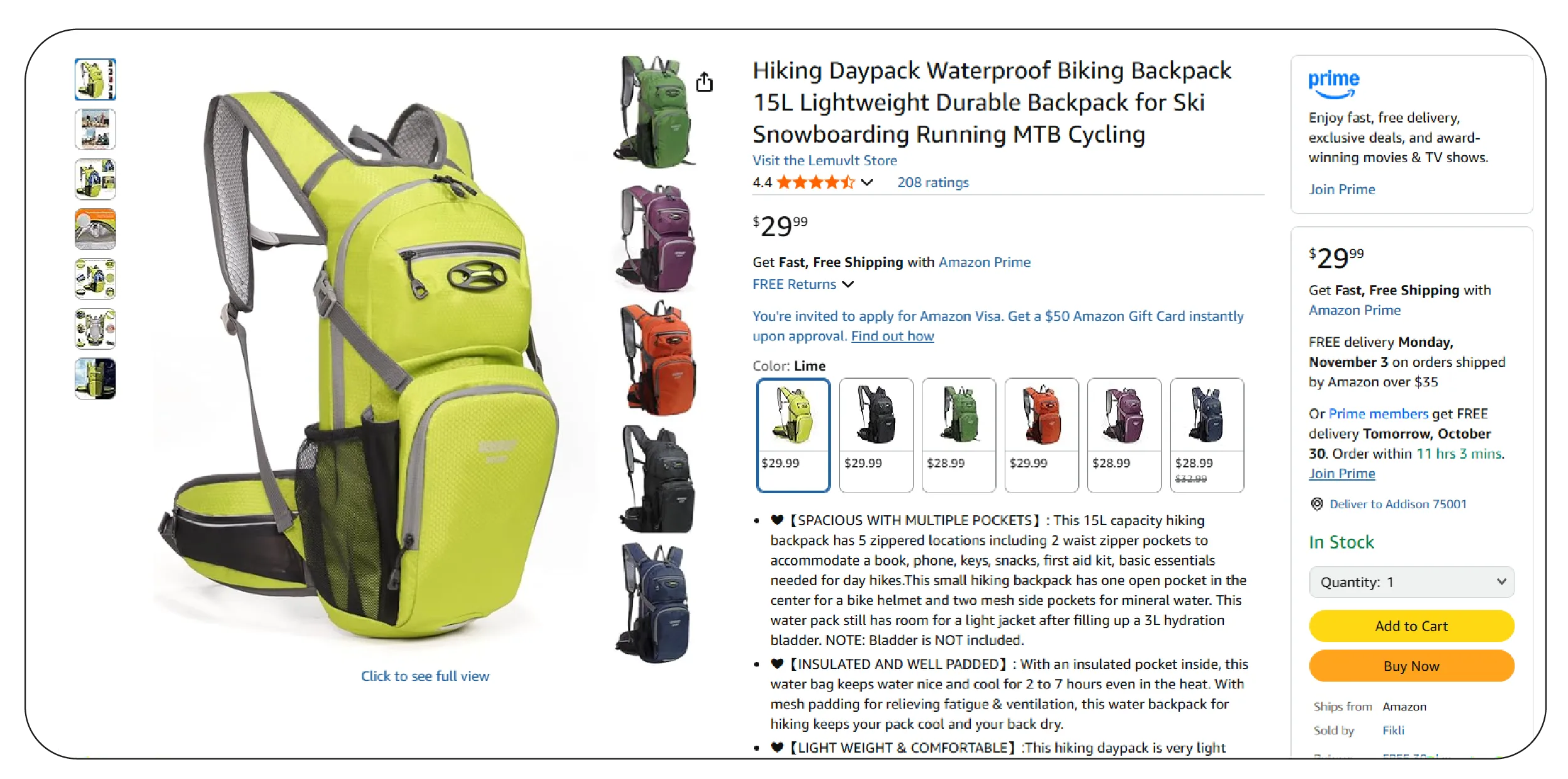

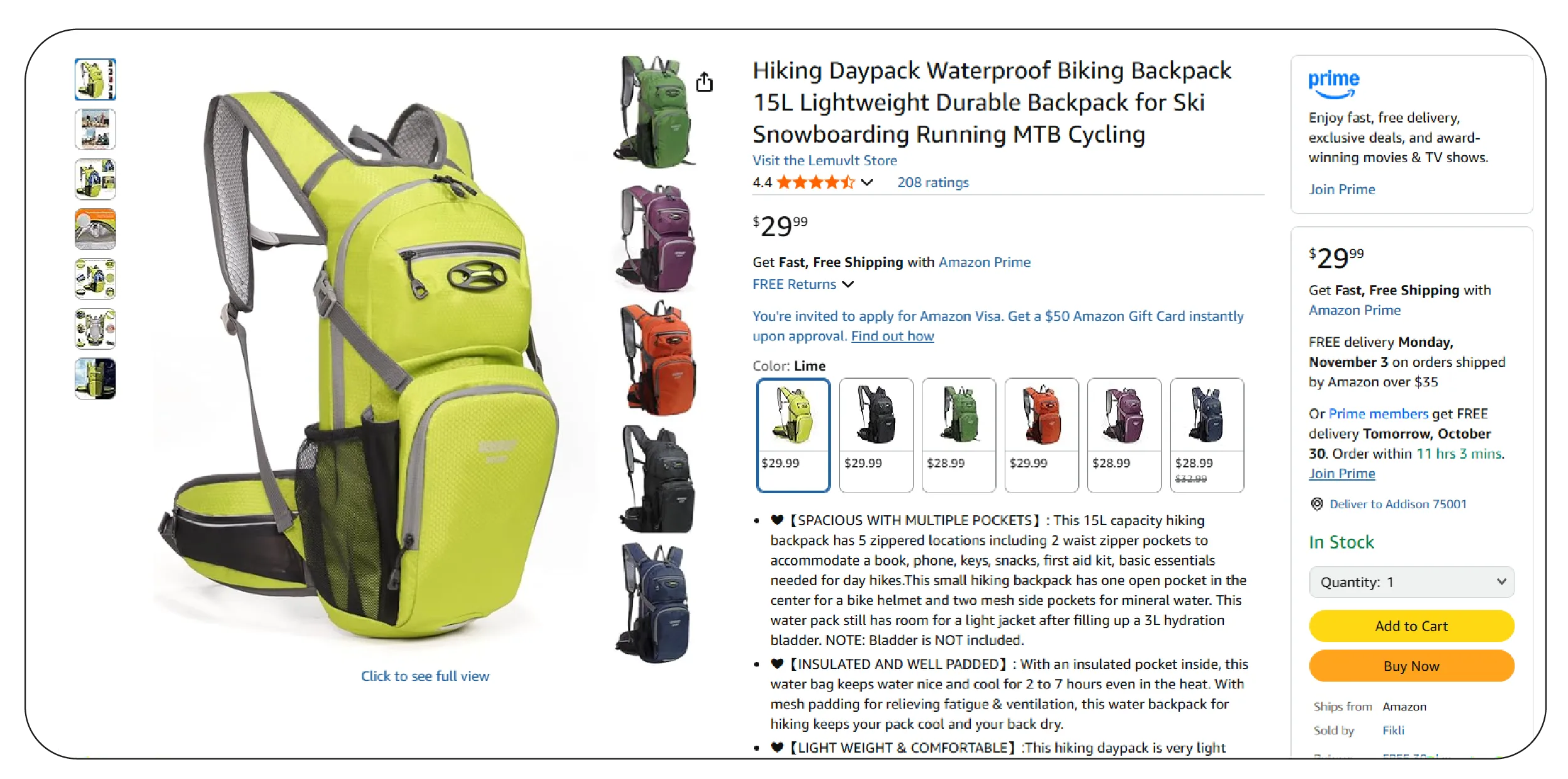

Real-World Applications: How Different Users Leverage Amazon Price Data

Different industries extract Amazon pricing data for unique strategic purposes

— from enforcement to analytics. For brands, it’s about maintaining control and consistency. By

continuously monitoring third-party listings, they can identify unauthorized sellers, detect MAP

(Minimum Advertised Price) violations, and protect brand equity. Automated scraping tools allow

them to track product availability, ratings, and competitor offerings across multiple regions.

For retailers, Amazon data acts as a mirror of market behavior. Monitoring

pricing changes helps in adjusting product margins, inventory management, and discount timing.

Retailers use continuous data feeds to respond faster than competitors and optimize promotional

campaigns dynamically.

For analysts and market researchers, historical and real-time datasets uncover

hidden pricing trends and customer demand patterns. With structured data pipelines, they can

train predictive models for forecasting demand, elasticity, and price sensitivity.

This is where scraping Amazon product prices efficiently becomes a

mission-critical capability — transforming unstructured web data into business-ready

intelligence.

By outsourcing data collection to experts, organizations gain access to

verified datasets they can confidently integrate into pricing models, dashboards, and reporting

systems without worrying about data gaps or format inconsistencies.

Unlock actionable insights from Amazon pricing! Partner with Product

Data Scrape to monitor competitors, optimize pricing, and drive smarter

decisions.

Why Scaling Price Scraping Changes Everything

Collecting prices from a few hundred listings is easy; maintaining accurate

datasets across millions of SKUs daily is where scaling truly matters. Amazon price scraping at

scale for analytics requires not just powerful crawling architecture but also a deep

understanding of how Amazon’s infrastructure behaves under load.

When scaled correctly, scraping pipelines ensure continuous freshness, meaning

your datasets reflect the latest market conditions, promotions, and competitor pricing changes.

Without this level of scale, pricing analytics quickly become outdated and misleading.

A professional provider uses intelligent scheduling systems to prioritize

high-value pages, minimize redundant crawls, and optimize proxy usage for efficiency. This

reduces infrastructure costs while maintaining high accuracy levels.

Integrating scraping pipelines with machine learning models further strengthens

price forecasting and elasticity studies, enabling teams to make proactive adjustments.

In parallel, organizations that scrape data from any e-commerce website often

apply these scalable architectures beyond Amazon, creating unified datasets for omnichannel

price analytics across major marketplaces and online stores.

Scaling isn’t just about volume — it’s about maintaining speed, reliability,

and compliance across complex, dynamic e-commerce ecosystems.

1. Beyond “Requests per Minute”: The Metric That Actually Counts

Many scraping teams measure success by how fast they can send requests. But for

professional data operations, true performance is measured by cost per valid, verified price —

the metric that determines both efficiency and profitability.

To reach this standard, you must design pipelines that deliver a high ratio of

clean, usable data to total requests sent. This involves managing retries, optimizing

concurrency, and using adaptive logic that detects when a scraper is about to be blocked.
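
As a rough illustration of the metric, the sketch below compares two crawl runs by cost per valid price rather than by raw request volume. The `CrawlStats` class, its field names, and all the numbers are assumptions made up for this example, not figures from any real pipeline.

```python
from dataclasses import dataclass


@dataclass
class CrawlStats:
    requests_sent: int
    valid_prices: int      # prices that passed validation
    total_cost_usd: float  # proxies + compute + bandwidth for the run

    @property
    def success_ratio(self) -> float:
        # Share of requests that produced a usable price.
        return self.valid_prices / self.requests_sent if self.requests_sent else 0.0

    @property
    def cost_per_valid_price(self) -> float:
        # A run with no valid prices has an unbounded unit cost.
        if self.valid_prices == 0:
            return float("inf")
        return self.total_cost_usd / self.valid_prices


# Illustrative numbers only: a fast, wasteful run vs. a slower, cleaner one.
fast = CrawlStats(requests_sent=1_000_000, valid_prices=600_000, total_cost_usd=900.0)
careful = CrawlStats(requests_sent=700_000, valid_prices=650_000, total_cost_usd=780.0)

for name, run in (("fast", fast), ("careful", careful)):
    print(name, f"{run.success_ratio:.0%}", f"${run.cost_per_valid_price:.4f}")
```

In this made-up comparison the run that sends fewer requests still comes out cheaper per usable price, which is the point of tracking the ratio instead of throughput.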

Well-designed architectures can handle billions of requests monthly with

minimal waste. They dynamically adjust scraping intensity based on proxy health, target load,

and error feedback loops.

With structured extraction and data quality audits, each price record becomes

reliable enough for analytics teams to act upon it.

By using frameworks designed to extract Amazon e-commerce product data, teams

can guarantee data accuracy across regions and categories while minimizing resource overhead.

Ultimately, cost-effective scalability ensures pricing teams can operate

continuously without overspending on bandwidth, proxies, or compute cycles — turning data

extraction into a predictable business process.

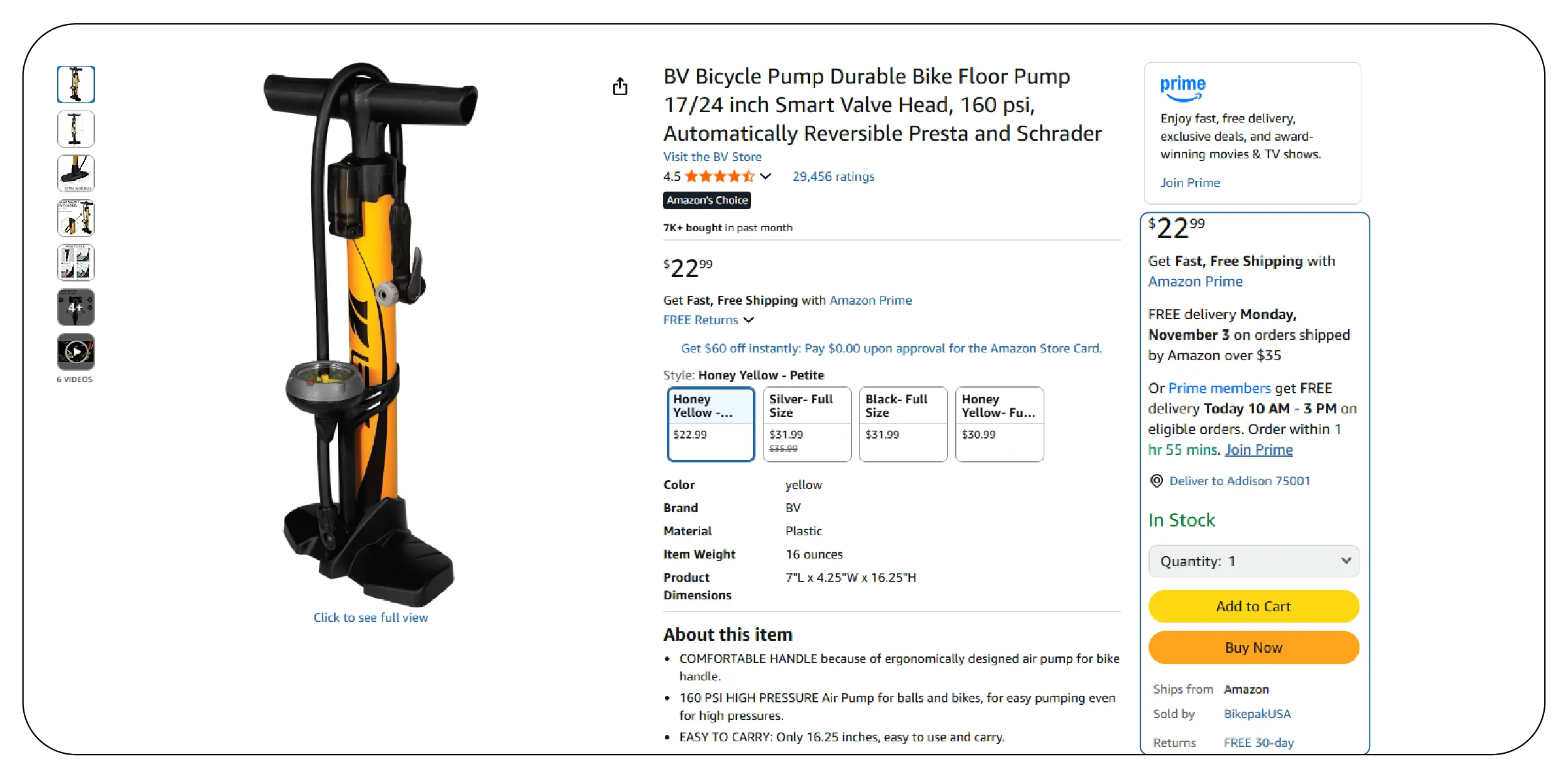

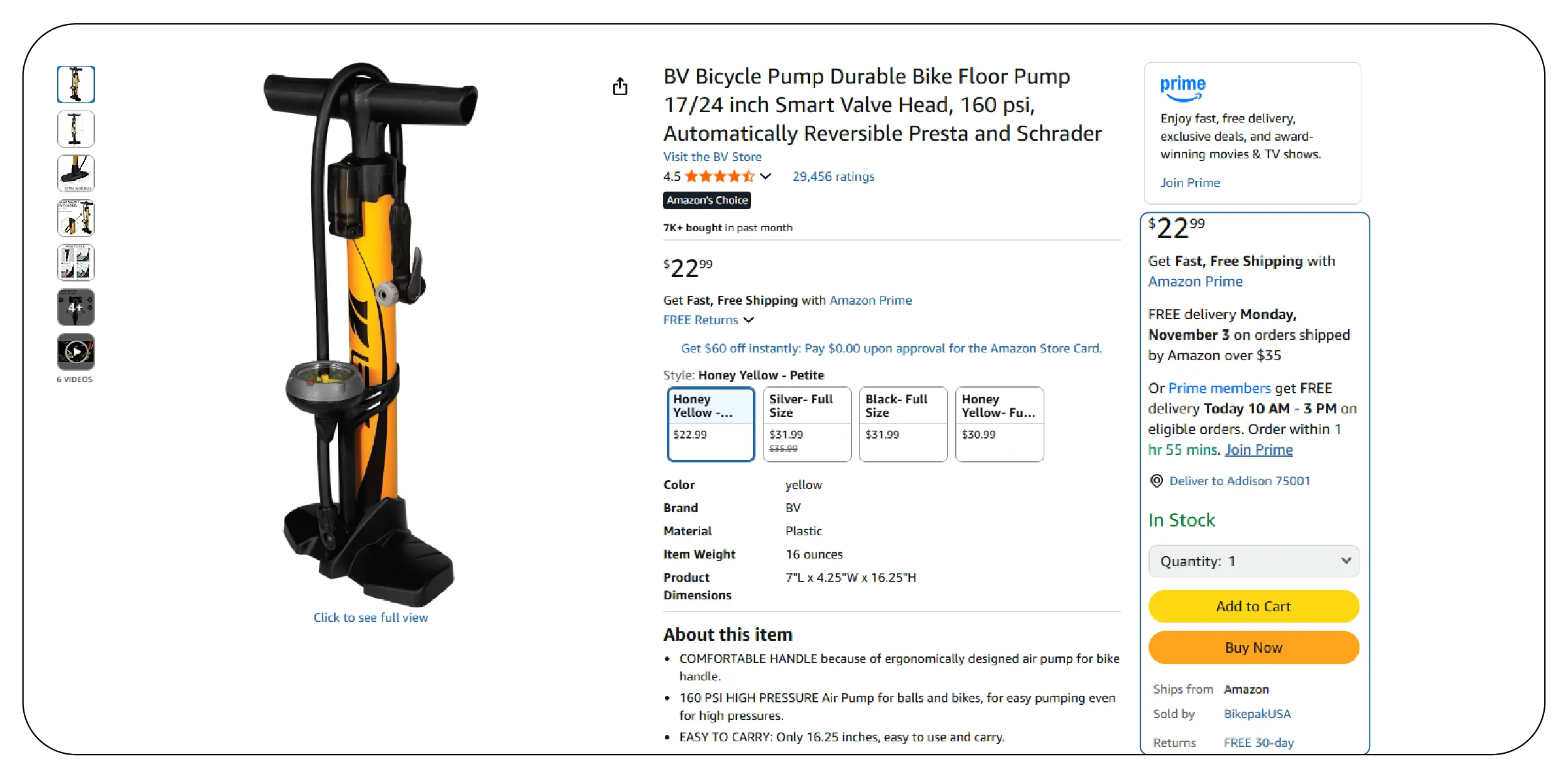

2. Buy Box Validation: Knowing Which Price to Trust

Amazon’s Buy Box is one of the most influential pricing elements in global

e-commerce. Winning it means higher visibility and conversions — but identifying the true Buy

Box price programmatically is complex.

Different sellers compete for that position, and price alone doesn’t guarantee

the win; factors like shipping time, seller rating, and fulfillment method all play a part.

Scraping systems must identify the right context to determine which offer currently “owns” the

Buy Box.

Advanced scraping workflows detect subtle page variations that mark the

difference between standard listings and Buy Box-winning ones. They then tag and store this

metadata for further analysis.
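
A minimal sketch of the tagging step might look like the following. It assumes a hypothetical upstream parser has already set an `is_buy_box` flag on each offer; how that flag is derived from the page is not shown here, and the `Offer` fields and seller names are invented for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Offer:
    seller: str
    price: float
    is_buy_box: bool  # assumed to be set by a (hypothetical) page parser


def buy_box_offer(offers: List[Offer]) -> Optional[Offer]:
    """Return the single offer flagged as Buy Box winner, or None if ambiguous."""
    winners = [o for o in offers if o.is_buy_box]
    return winners[0] if len(winners) == 1 else None


offers = [
    Offer("seller-a", 24.99, False),
    Offer("seller-b", 26.49, True),   # not the cheapest, but holds the Buy Box
    Offer("seller-c", 25.10, False),
]

winner = buy_box_offer(offers)
cheapest = min(offers, key=lambda o: o.price)
print(winner.seller, cheapest.seller)
```

Keeping the Buy Box winner separate from the cheapest offer makes it harder to confuse the two in later analysis.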

This process, when automated at scale, ensures you aren’t comparing outdated or

irrelevant offers.

With enterprise-level scraping infrastructure, you can customize e-commerce

dataset scraping to focus specifically on Buy Box visibility, seller distribution, and price

movements across time.

The result: validated, context-aware datasets that support accurate revenue

modeling and competitive response strategies.

3. PA-API vs. Scraping: When to Blend or Bypass

Amazon’s Product Advertising API (PA-API) provides structured data for approved

partners — but its limitations become clear once you need comprehensive, real-time coverage. The

API restricts access to specific products and often updates with delays, meaning that data

freshness is not guaranteed for critical decision-making.

On the other hand, scraping offers full visibility into live listings, dynamic

prices, and availability indicators. The best strategy blends both approaches — using PA-API for

reliable metadata and scraping for immediate price verification and real-time insights.

Modern providers automate this reconciliation process, ensuring both sources

stay synchronized while preserving compliance and data accuracy.
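
One way to picture the reconciliation is a small merge function like the sketch below. The dictionary keys (`asin`, `title`, `price`, `observed_at`) are placeholders chosen for this example and are not the actual PA-API response schema; the tolerance value is likewise an assumption.

```python
def reconcile(api_record: dict, scraped_record: dict, tolerance: float = 0.01) -> dict:
    """Merge API metadata with a scraped price and flag disagreement."""
    merged = {
        "asin": api_record["asin"],
        "title": api_record["title"],
        "price": scraped_record["price"],            # the live price is kept
        "observed_at": scraped_record["observed_at"],
    }
    api_price = api_record.get("price")
    # Flag only when the API actually reported a price that differs.
    merged["price_mismatch"] = (
        api_price is not None
        and abs(api_price - scraped_record["price"]) > tolerance
    )
    return merged


# Hypothetical records for illustration.
api_record = {"asin": "B000EXAMPLE", "title": "Example Laptop", "price": 19.99}
scraped_record = {"price": 17.49, "observed_at": "2025-01-01T12:00:00Z"}

merged = reconcile(api_record, scraped_record)
print(merged["price"], merged["price_mismatch"])
```

Records flagged with `price_mismatch` could then be queued for a second look instead of being silently overwritten.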

When businesses extract Amazon API product data in parallel with live scraping,

they get the best of both worlds — structured, policy-compliant data enriched by current,

verified price points from Amazon’s front end.

This hybrid model is especially valuable for analytics-driven organizations

that need speed, completeness, and confidence in every data point before applying insights to

pricing models or dashboards.

4. Geo-Mobile Parity Testing: Seeing What Your Customers See

Amazon’s pricing can differ subtly by geography, device type, and even account

behavior. A buyer in London might see a different price than one in Delhi, and mobile shoppers

might get exclusive discounts unavailable on desktops.

To ensure pricing parity, businesses conduct geo-mobile testing — scraping

Amazon from multiple countries, devices, and user sessions simultaneously. This allows analysts

to detect inconsistencies, regional promotions, or localization bugs that may distort pricing

insights.
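
The comparison step could be sketched roughly as follows. This example only compares devices within the same region, since cross-country comparisons would also need currency conversion. The observation fields, the 1% threshold, and the prices are all assumptions for illustration.

```python
from collections import defaultdict


def device_parity_gaps(observations, max_spread_pct=1.0):
    """Return {(asin, region): spread_pct} where device prices diverge."""
    grouped = defaultdict(dict)
    for obs in observations:
        grouped[(obs["asin"], obs["region"])][obs["device"]] = obs["price"]

    gaps = {}
    for key, by_device in grouped.items():
        if len(by_device) < 2:
            continue  # only one device seen, nothing to compare
        low, high = min(by_device.values()), max(by_device.values())
        if low <= 0:
            continue  # skip invalid prices rather than divide by zero
        spread_pct = (high - low) / low * 100
        if spread_pct > max_spread_pct:
            gaps[key] = round(spread_pct, 2)
    return gaps


# Made-up observations: same ASIN, two regions, two device types.
observations = [
    {"asin": "B000EXAMPLE", "region": "UK", "device": "desktop", "price": 50.00},
    {"asin": "B000EXAMPLE", "region": "UK", "device": "mobile", "price": 47.50},
    {"asin": "B000EXAMPLE", "region": "IN", "device": "desktop", "price": 4200.0},
    {"asin": "B000EXAMPLE", "region": "IN", "device": "mobile", "price": 4200.0},
]

gaps = device_parity_gaps(observations)
print(gaps)
```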

Enterprise-grade infrastructure uses residential proxies, real-device

simulations, and geo-targeted browser configurations to ensure authentic data collection. This

approach captures real-world customer experiences with precision.

By integrating these workflows, teams can build datasets that reflect the

entire spectrum of Amazon’s dynamic behavior.

For instance, Web Data Intelligence API frameworks help capture, unify, and

normalize such multi-regional data in a way that’s both compliant and analytics-ready —

supporting global parity audits and market-specific strategy optimization.

These tests reveal whether shoppers truly see consistent prices — or if your

pricing data needs regional calibration before being used in strategic analysis.

5. Proxy Pool Health: The Hidden Cost of Decay

Even the most robust scraping operations depend on one thing — proxy stability.

Over time, proxies degrade, get blacklisted, or lose speed. A decaying proxy pool silently

inflates costs by causing retries, failed captures, and incomplete datasets.

Monitoring pool health is therefore critical for sustainable operations.

Providers track latency, block rates, and response times, dynamically rotating underperforming

proxies to maintain quality.
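
A simplified version of that health tracking might look like this sketch. The thresholds, the `ProxyStats` class, and the addresses (taken from the reserved documentation range 192.0.2.0/24) are assumptions for the example; a production pool would likely use rolling windows rather than lifetime totals.

```python
from dataclasses import dataclass


@dataclass
class ProxyStats:
    address: str
    requests: int = 0
    blocked: int = 0
    total_latency_s: float = 0.0

    def record(self, latency_s: float, was_blocked: bool) -> None:
        self.requests += 1
        self.total_latency_s += latency_s
        if was_blocked:
            self.blocked += 1

    @property
    def block_rate(self) -> float:
        return self.blocked / self.requests if self.requests else 0.0

    @property
    def avg_latency_s(self) -> float:
        return self.total_latency_s / self.requests if self.requests else 0.0


def healthy(pool, max_block_rate=0.2, max_latency_s=3.0, min_requests=10):
    """Keep proxies under both thresholds; keep untested ones until they have enough samples."""
    return [
        p for p in pool
        if p.requests < min_requests
        or (p.block_rate <= max_block_rate and p.avg_latency_s <= max_latency_s)
    ]


good = ProxyStats("192.0.2.10")
bad = ProxyStats("192.0.2.11")
fresh = ProxyStats("192.0.2.12")  # no traffic yet
for i in range(10):
    good.record(0.5, was_blocked=(i == 0))  # 1 block in 10 requests
    bad.record(0.8, was_blocked=(i < 5))    # 5 blocks in 10 requests

print([p.address for p in healthy([good, bad, fresh])])
```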

Continuous diagnostics ensure optimal performance and cost efficiency. Some

advanced providers use machine-learning algorithms to predict which IPs are likely to fail soon,

removing them pre-emptively from rotation.

Maintaining this balance allows large-scale crawlers to operate seamlessly

without human intervention, even under Amazon’s complex anti-bot measures.

Companies seeking scalable, cost-effective scraping infrastructures often turn

to pricing intelligence services to manage proxy orchestration and data-pipeline optimization.

Healthy proxy systems are the backbone of any long-term price scraping

strategy, directly influencing data validity, speed, and overall cost per verified record.

6. Handling Dynamic Pages: When Prices Appear Only After User Actions

Some Amazon product pages don’t reveal actual prices immediately — they appear

only after actions like selecting configurations or clicking “See price in cart.” Capturing

these requires advanced, session-aware automation capable of simulating real user flows.

Without this capability, scrapers miss critical data or capture incomplete

prices that misrepresent the marketplace.

Session management, JavaScript rendering, and event-based triggers are the keys

to reliable dynamic scraping. Headless browsers like Playwright or Puppeteer can mimic real

interactions while maintaining efficiency and stealth.

Enterprise scraping providers orchestrate these processes at scale, managing

thousands of sessions in parallel while maintaining compliance.

When such pipelines are integrated with analytics frameworks, they reveal not

only current prices but also hidden offer patterns or discount mechanics used in specific

product categories.

These capabilities, combined with competitor price monitoring services, give

organizations a decisive edge — enabling continuous visibility into changing prices, competitor

tactics, and Buy Box dynamics across categories and regions.

7. Freshness SLAs: Speed vs. Accuracy in Price Updates

In a hyper-competitive marketplace, data loses value quickly if it’s not fresh.

A price that’s 12 hours old might already be outdated due to flash discounts or automated

repricing. That’s why defining freshness SLAs (Service-Level Agreements) is critical.

The frequency of price updates depends on business needs — hourly for

competitive electronics, daily for household goods, or real-time for volatile product segments.

Freshness directly impacts decision accuracy, forecasting reliability, and promotional timing.

Enterprise scraping providers maintain automated refresh pipelines that

prioritize high-change categories first, ensuring time-sensitive updates reach analytics systems

quickly. These pipelines can auto-detect when a listing changes and schedule immediate recrawls,

keeping your dataset perpetually up-to-date.
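
To make the idea of a freshness SLA concrete, here is a small sketch of a recrawl queue. The per-category targets mirror the hourly and daily examples above, but the `FRESHNESS_SLA` table, the listing fields, and the ASINs are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical per-category freshness targets; values are illustrative only.
FRESHNESS_SLA = {
    "electronics": timedelta(hours=1),
    "household": timedelta(days=1),
}


def recrawl_queue(listings, now):
    """Return ASINs past their SLA, most overdue (relative to the SLA) first."""
    overdue = []
    for item in listings:
        sla = FRESHNESS_SLA[item["category"]]
        age = now - item["last_crawled"]
        if age > sla:
            # Dividing two timedeltas gives a float: how many SLAs old the data is.
            overdue.append((age / sla, item["asin"]))
    return [asin for _, asin in sorted(overdue, reverse=True)]


now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
listings = [
    {"asin": "A-ELEC", "category": "electronics", "last_crawled": now - timedelta(hours=3)},
    {"asin": "B-HOME", "category": "household", "last_crawled": now - timedelta(hours=12)},
    {"asin": "C-HOME", "category": "household", "last_crawled": now - timedelta(hours=36)},
]

print(recrawl_queue(listings, now))
```

Ranking by age relative to the SLA, rather than by raw age, lets a three-hour-old electronics price outrank a 36-hour-old household price.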

When powered by AI-driven scheduling systems, this approach balances cost and

responsiveness while avoiding redundant crawling.

To further enhance efficiency, teams often integrate Amazon product

intelligence e-commerce dataset models to measure freshness and coverage across categories.

These models ensure every dataset meets defined latency and completeness benchmarks, delivering

trustworthy insights for pricing and inventory strategy decisions.

8. Ensuring Data Integrity: From Raw HTML to Reliable Price Feeds

Raw HTML is messy — full of nested tags, inconsistent formats, and dynamic

content. Converting it into structured, reliable data requires advanced validation and cleansing

workflows. Data integrity ensures that what analytics teams consume reflects the real market

situation.

Professional providers apply schema validation, outlier detection, and

cross-referencing against historical records to verify every captured price. They flag anomalies

and re-scrape automatically when inconsistencies appear.
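
A bare-bones version of these checks could be sketched as below. The required fields, the median-based outlier rule, and the 50% deviation threshold are assumptions chosen for the example, not a description of any particular provider's validation logic.

```python
from statistics import median


def validate_price(record, history, max_deviation=0.5):
    """Return a list of problems; an empty list means the record passed."""
    problems = []

    # Schema check: every record needs these fields.
    for field in ("asin", "price", "currency", "region"):
        if record.get(field) in (None, ""):
            problems.append(f"missing {field}")

    price = record.get("price")
    if isinstance(price, (int, float)):
        if price <= 0:
            problems.append("non-positive price")
        elif history:
            # Outlier check: compare against the median of past prices.
            baseline = median(history)
            if abs(price - baseline) / baseline > max_deviation:
                problems.append("outlier vs. history")
    return problems


history = [19.99, 20.49, 19.79]
ok = {"asin": "B000EXAMPLE", "price": 19.50, "currency": "USD", "region": "US"}
suspicious = {"asin": "B000EXAMPLE", "price": 1.99, "currency": "USD", "region": "US"}

print(validate_price(ok, history))
print(validate_price(suspicious, history))
```

A record that returns any problems would be a candidate for an automatic re-scrape rather than being passed downstream.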

Maintaining this integrity is crucial for downstream analytics, where even a 2%

error rate can distort revenue projections.

Many providers automate these processes through machine-learning-based quality

checks that continuously learn from past errors and improve extraction accuracy.

For example, when teams extract Amazon API product data, integrity validation

ensures that scraped values align with product metadata such as ASIN, title, and region —

creating a seamless, analytics-ready feed.

This high-fidelity approach enables businesses to build confidence in their

data pipelines and make informed pricing or inventory decisions based on verified, trustworthy

inputs.

9. Compliance and Transparency: Staying Clear of Legal Gray Zones

Responsible data collection is not just about efficiency — it’s about ethics

and compliance. Amazon enforces strong data-access policies, and professional scraping providers

build frameworks that respect those boundaries while delivering value.

Compliance involves honoring rate limits, protecting personal data, and

maintaining transparency in how crawlers interact with Amazon’s platform. Reputable providers

document their data-collection methods and audit processes, and adhere to international privacy

regulations like GDPR and CCPA.

This ensures organizations benefit from analytics without exposing themselves

to regulatory or reputational risks.

Transparent reporting further enhances trust: clients can track exactly how,

when, and from where data was extracted.

Some teams leverage Web Data Intelligence API-based compliance layers that

verify crawl behavior, manage consent mechanisms, and guarantee ethical data sourcing.

By prioritizing compliance, businesses ensure longevity in their data

operations and preserve positive relationships with platforms while accessing high-quality,

reliable market insights.

10. Managing Costs Without Compromising Accuracy

At enterprise scale, web scraping infrastructure costs can spiral quickly if

not optimized. Factors such as proxy consumption, compute cycles, and redundant requests can

inflate budgets. The key is balancing accuracy with efficiency.

Adaptive routing systems evaluate which pages require immediate crawling and

which can wait — ensuring every dollar spent delivers actionable intelligence. These policies

reduce operational waste while maintaining precision in pricing analytics.

Scalable pricing pipelines use elastic infrastructure that automatically

expands during high-demand periods and contracts during idle times. This approach aligns costs

directly with data needs, preventing overspending.

Smart caching strategies also play a major role, preventing unnecessary repeat

crawls and accelerating data delivery for analytics teams.
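
As a sketch of the caching idea, the class below remembers a result for a fixed time-to-live so the same page is not fetched again too soon. The `CrawlCache` name, the key format, and the 60-second TTL are assumptions for illustration; the injectable `clock` is there only to make the behavior easy to demonstrate.

```python
import time


class CrawlCache:
    """Remember recent results so a page is not re-fetched within its TTL."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store = {}

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        value, stored_at = entry
        if self.clock() - stored_at > self.ttl:
            del self._store[key]  # expired: the caller should crawl again
            return None
        return value

    def put(self, key, value):
        self._store[key] = (value, self.clock())


# Use a fake clock so the expiry can be shown without waiting.
fake_now = [0.0]
cache = CrawlCache(ttl_seconds=60, clock=lambda: fake_now[0])
cache.put("B000EXAMPLE:US", 19.99)

hit = cache.get("B000EXAMPLE:US")      # still fresh
fake_now[0] = 61.0
miss = cache.get("B000EXAMPLE:US")     # past the TTL
print(hit, miss)
```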

By implementing these frameworks, providers make it possible to extract Amazon

e-commerce product data with consistent accuracy while keeping infrastructure spend predictable.

This cost-control strategy transforms scraping from a reactive IT task into a

disciplined, financially accountable data operation that continuously delivers measurable ROI.

11. Engineering for Change: Resilient Patterns That Outlast Amazon’s Updates

Amazon’s front-end structure changes frequently — sometimes multiple times a

week. New HTML layouts, JavaScript injection, or asynchronous price rendering can easily break

static scrapers. Sustainable data collection depends on resilient engineering patterns that can

adapt without constant manual intervention.

Professional providers build modular parsers that detect structural shifts

automatically and retrain themselves using machine learning. These parsers identify anomalies in

HTML tags, DOM hierarchies, or XPath locations and self-adjust to extract the same field

consistently.

Continuous monitoring frameworks detect sudden drops in data volume or quality

and trigger automated repair processes. This proactive engineering ensures uninterrupted data

flow even as Amazon evolves its UI or content delivery systems.
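
The monitoring side can start as something as simple as the check sketched below, which compares the latest run against a recent average. The function name, the 30% threshold, and the counts are assumptions for this example; the repair step it would trigger is not shown.

```python
from statistics import mean


def volume_dropped(recent_counts, current_count, max_drop=0.3):
    """True if the current run yielded far fewer valid prices than the recent average."""
    if not recent_counts:
        return False  # no baseline yet
    baseline = mean(recent_counts)
    if baseline == 0:
        return False
    return (baseline - current_count) / baseline > max_drop


recent = [1000, 980, 1020]  # valid prices captured in previous runs (made up)
print(volume_dropped(recent, 990))  # normal variation
print(volume_dropped(recent, 400))  # likely a broken parser or a block
```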

When organizations scrape data from any e-commerce website using self-healing

architectures, they future-proof their operations against constant platform updates —

maintaining accuracy, stability, and compliance in the face of ongoing change.

Ultimately, resilience in scraping pipelines isn’t just about technical

robustness; it’s about preserving the continuity of insight, ensuring data keeps flowing to

analytics systems even when the marketplace shifts underneath it.

12. From Data to Decisions: The Analytics Playbook

Raw data alone offers little value without contextual interpretation. Once

pricing datasets are extracted, they must be normalized, aggregated, and visualized to deliver

actionable intelligence. This transformation process turns static information into competitive

advantage.

Analysts blend historical pricing data with inventory levels, review trends,

and seasonal patterns to forecast future demand and price elasticity. Machine learning models

identify outliers, detect trends, and simulate how small pricing shifts impact conversion rates

and revenue.
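
For readers who want a concrete starting point, price elasticity between two observations can be estimated with the standard midpoint (arc) formula, sketched below. This is a textbook calculation swapped in for illustration, not a description of the machine learning models mentioned above, and the prices and unit counts are invented.

```python
def arc_elasticity(p1, q1, p2, q2):
    """Midpoint (arc) price elasticity of demand between two observations."""
    if p1 == p2:
        raise ValueError("prices must differ to estimate elasticity")
    pct_change_qty = (q2 - q1) / ((q1 + q2) / 2)
    pct_change_price = (p2 - p1) / ((p1 + p2) / 2)
    return pct_change_qty / pct_change_price


# Made-up example: price rises from 20 to 22, weekly units fall from 100 to 85.
elasticity = arc_elasticity(p1=20.0, q1=100, p2=22.0, q2=85)
print(round(elasticity, 2))
```

A magnitude above 1 suggests demand in this invented example is elastic, meaning the price increase would reduce units sold more than proportionally.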

Retailers use these insights for dynamic repricing — automatically adjusting

prices based on competitor actions, time of day, or product availability.

With integrated pipelines, businesses can extract Amazon e-commerce product

data and feed it directly into BI dashboards, data warehouses, or predictive models for instant

analysis.

The result is a closed-loop system: scraped data flows into analytics engines,

insights drive strategy, and feedback loops continuously refine models — empowering teams to

make smarter, faster pricing decisions with precision and confidence.

Build or Buy? Choosing Your Amazon Price Data Strategy

Every organization eventually faces the classic build-versus-buy decision:

should you develop an in-house scraping infrastructure or rely on a managed service provider?

Building internally gives complete control but requires dedicated engineering,

proxy management, compliance handling, and continuous maintenance. Over time, the hidden costs

of debugging, scaling, and updating parsers often outweigh the initial savings.

Buying, on the other hand, provides immediate scalability and compliance.

Managed service providers handle everything — proxy rotation, parser adaptation, error

management, and delivery pipelines — so your teams can focus on analysis instead of upkeep.

For many organizations, the hybrid approach works best: internal data teams

define goals and governance while external providers execute the heavy lifting of extraction.

Partnering with experts who offer pricing intelligence services ensures that

your pricing insights remain accurate, compliant, and timely — while freeing your internal teams

to innovate and act on those insights rather than maintaining the tools behind them.

Deciding between DIY or managed scraping? Choose Product Data Scrape for

reliable, scalable, and compliant Amazon pricing data today!

Trends to Watch in 2025: The Future of Amazon Price Scraping

The landscape of web scraping and data analytics is evolving rapidly. As

Amazon’s platform continues to grow in complexity, new technologies and ethical standards are

transforming how data is collected, processed, and analyzed. The future of Amazon price scraping

lies in smarter automation, compliance, and sustainability.

Artificial intelligence will play a central role in 2025. Expect broader

adoption of AI-driven extraction models capable of adapting to layout changes in real-time.

These systems will continuously learn from structural variations in Amazon’s pages, ensuring

uninterrupted data flow and higher accuracy — even as HTML patterns evolve.

Ethical scraping frameworks will also gain traction. Transparency, compliance,

and consent-based data sourcing will become essential components of any large-scale scraping

operation. Businesses will prioritize legal compliance under GDPR and CCPA while maintaining the

integrity and privacy of collected data.

Machine learning will redefine accuracy benchmarks. Automated validation

systems will detect anomalies, flag outliers, and self-correct extraction errors across millions

of listings. This will make datasets cleaner, more reliable, and analytics-ready from the moment

they are captured.

Another key trend is sustainability in data operations. As environmental

awareness increases, scraping providers will focus on reducing bandwidth waste, optimizing proxy

usage, and minimizing energy consumption — turning efficiency into a measurable competitive

advantage.

The rise of Custom eCommerce Dataset Scraping will allow

companies to design data feeds around specific business KPIs — such as promotional response

times, price elasticity, or product lifecycle tracking — while maintaining full compliance and

precision. This customization will enable businesses to extract only what matters most, reducing

both noise and cost.

2025 will mark a major turning point where price scraping becomes more than a

technical necessity — it becomes a core pillar of competitive intelligence. Businesses that

integrate scalable, ethical, and intelligent scraping pipelines will lead the next era of

eCommerce analytics.

Conclusion

In today’s digital economy, success depends on speed, accuracy, and

adaptability. Amazon price scraping at scale for analytics is no longer a side

project — it’s a business-critical function that fuels data-driven decision-making.

With a professional web scraping partner, organizations can ensure compliance,

reduce costs, and access a continuous stream of clean, verified, and analytics-ready Amazon

data. This enables smarter pricing, improved competitiveness, and measurable ROI.

Whether you’re a brand monitoring MAP violations, a retailer optimizing

promotions, or an analyst forecasting demand trends, the future belongs to businesses that

combine automation, intelligence, and scale in their data strategy.

Partner with Product Data Scrape — the trusted leader in

large-scale eCommerce data extraction. We help you collect, clean, and deliver Amazon pricing

data that drives measurable results. Contact Product Data Scrape today to power

your analytics with precision, compliance, and scalability.
