Quick Overview

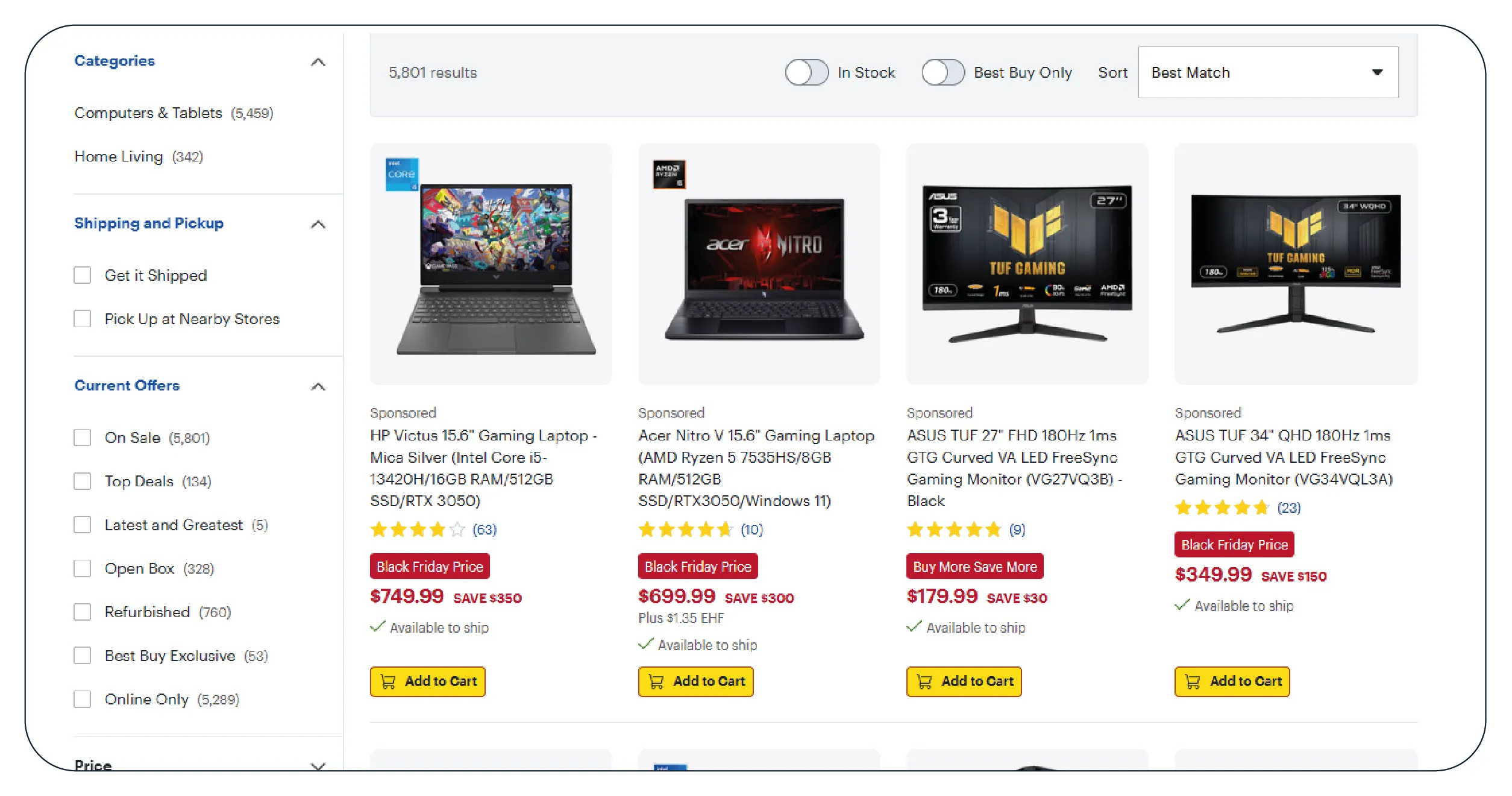

The client, a mid-sized electronics analytics company, approached us to improve how they

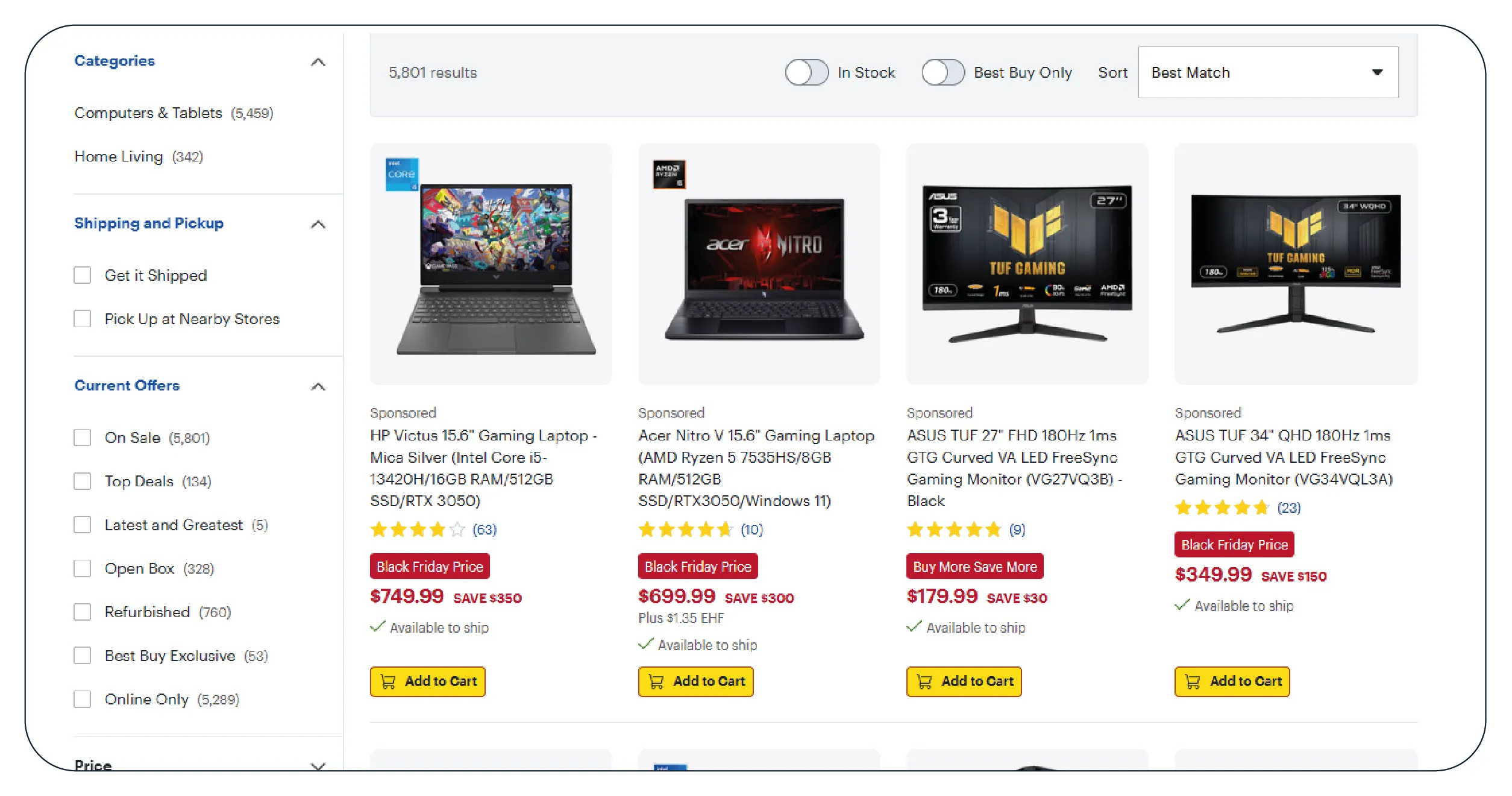

collected product data from large U.S. retailers. They needed a solution that could Scrape

BestBuy product pages in bulk with consistent accuracy and speed to fuel pricing and assortment

insights. Our team delivered a rapid data extraction system capable of handling massive volumes

while ensuring precise mapping, categorization, and quality assurance. Using advanced

automation, we also helped them Extract BestBuy.com E-Commerce Product Data daily for real-time

reporting. As a result, they achieved a 92% faster workflow, 99.4% accuracy, and fully automated

data delivery to their BI stack.

The Client

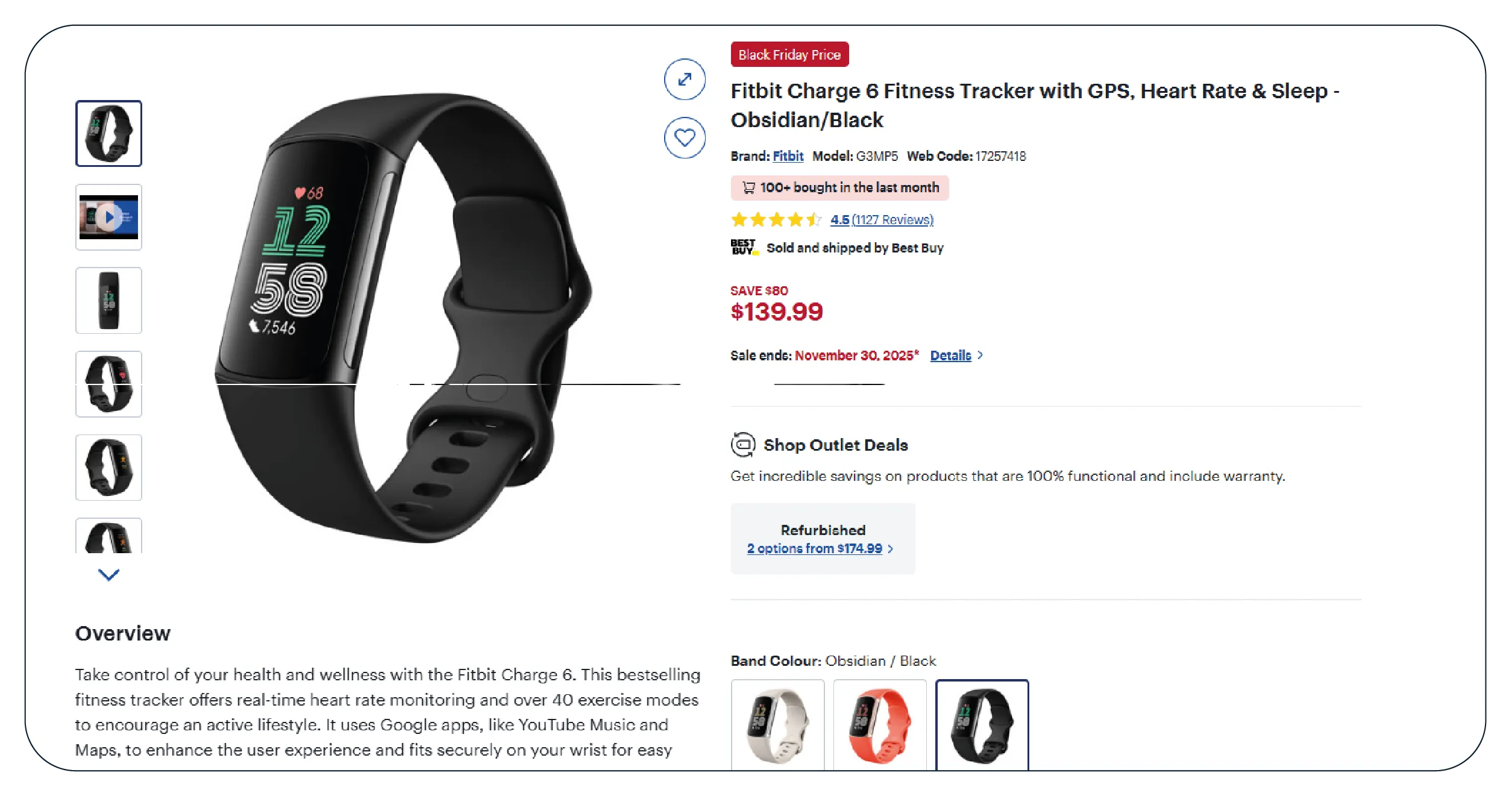

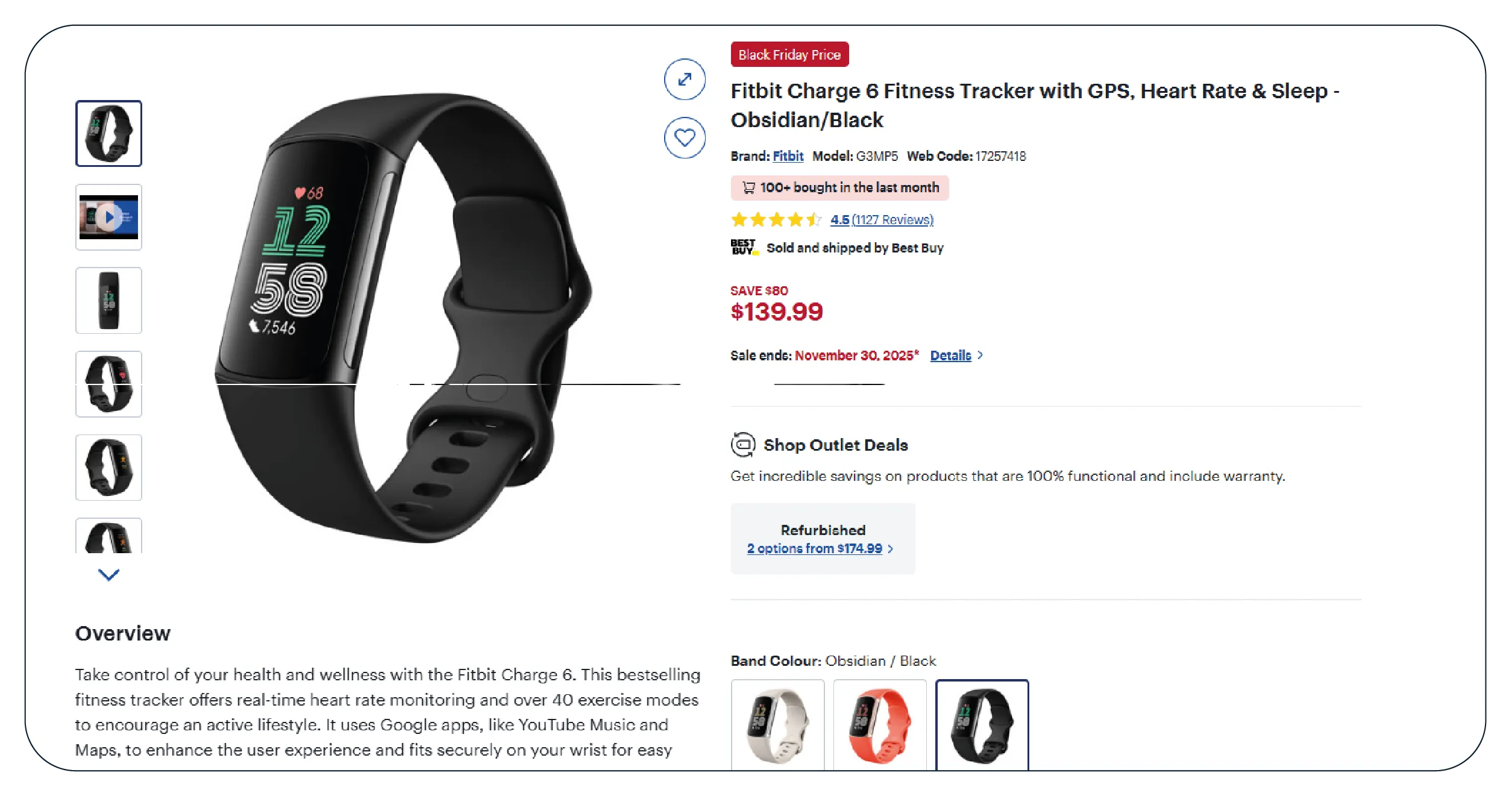

The client operates in the consumer electronics intelligence industry, where product pricing, stock levels, and market conditions shift rapidly. As online retail became increasingly competitive, they needed deeper visibility into marketplace dynamics. Industry pressure was rising as more brands relied on data-driven decision-making, and real-time insights had become essential for maintaining a competitive edge.

Before partnering with us, the client relied on multiple manual tools that were inconsistent, slow, and error-prone. They wanted a way to scrape the BestBuy website without coding so their analysts could focus on insights rather than repetitive operational tasks. Their internal team struggled to scale extraction jobs as product counts grew, and the resulting delays affected decisions across pricing, supply planning, and promotional strategy. They also needed structured, clean datasets that fit their analytics workflow. This transformation was vital to improve efficiency, reduce manual work, and support their expanding data-driven services.

Goals & Objectives

To meet the client’s expectations, we set clear goals focused on performance, automation, and long-term scalability. They wanted a BestBuy data scraper that could adapt to dynamic page layouts and deliver reliable data at scale. We also aligned our solution with their broader analytics roadmap and Pricing Intelligence Services.

Key objectives:

Deliver fast, automated extraction of large product datasets

Scale seamlessly as catalog size grows

Improve accuracy, consistency, and refresh frequency

Build an end-to-end automated pipeline

Integrate output into BI tools

Enable real-time analysis of product, stock, and price

Target metrics:

90%+ reduction in manual effort

99%+ field-level accuracy

3x faster update cycles

Zero downtime during peak extraction windows

The Core Challenge

Before implementation, the client faced major bottlenecks that hindered productivity. Their previous tools frequently broke when website structures changed, causing unpredictable delays. They needed stable BestBuy scraping for competitor-tracking workflows to benchmark pricing multiple times per day. Slow extraction created a reporting backlog, so insights were often obsolete by the time they reached decision-makers. They also struggled to maintain a uniform taxonomy across thousands of products, which weakened the quality of their Product Pricing Strategies Service. Their existing scraping methods lacked robust monitoring, retries, and validation layers, resulting in inconsistent datasets and missing attributes. In addition, their team was overwhelmed by manually consolidating files, cleaning data, and re-running failed tasks. They required a highly resilient solution that could run at scale while remaining fully automated.

Our Solution

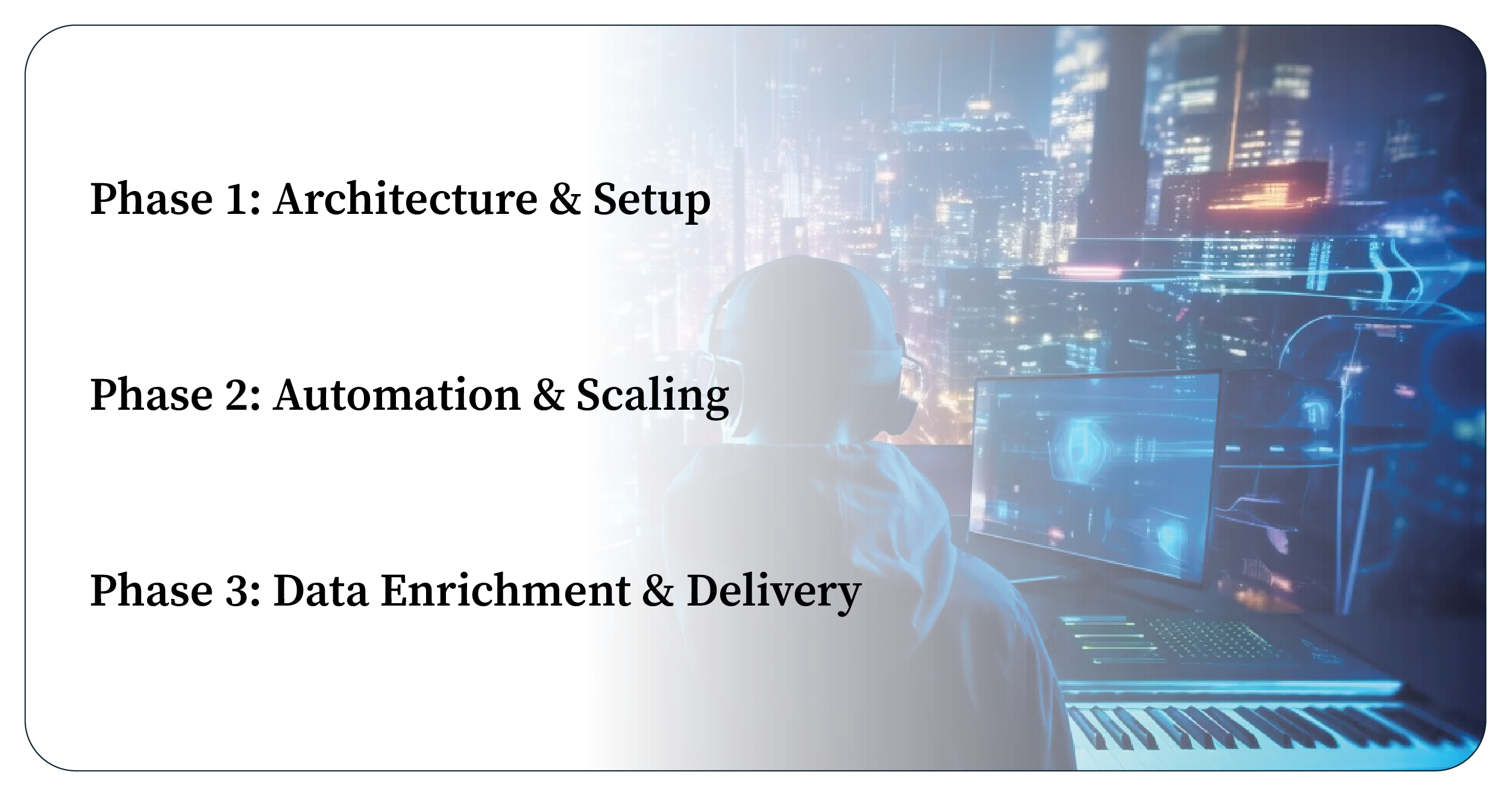

We deployed a phased extraction and automation solution designed for long-term scalability. At its core, we integrated proven web scraping tools with our proprietary orchestration engine to ensure stability, speed, and clean datasets. The project began with an assessment phase to map all required data points, validate unique product identifiers, and understand the client’s internal data workflows. This ensured alignment between business needs and technical output.

Phase 1 – Architecture & Setup

We built a modular data pipeline capable of handling tens of thousands of URLs daily. The pipeline relied on handling of dynamically rendered pages, adaptive parsing templates, and structured extraction logic. This design allowed templates to be updated seamlessly whenever the website layout changed, eliminating downtime.
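To make the idea of adaptive parsing templates concrete, here is a minimal Python sketch using only the standard library's `html.parser`. It is an illustration, not our production engine: each field lists several candidate selectors in priority order, and the parser falls back to the next one when a layout change breaks the first. The attribute names and values (`data-testid="product-title"`, `sku-title`, and so on) are hypothetical and do not describe BestBuy's real markup.

```python
from html.parser import HTMLParser

# Void elements never get a closing tag, so they must not affect nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class AttrTextCollector(HTMLParser):
    """Collect the text of every element whose attribute `attr` equals `value` exactly."""

    def __init__(self, attr, value):
        super().__init__()
        self.attr, self.value = attr, value
        self.depth = 0        # > 0 while inside a matching element
        self.matches = []
        self._buf = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self.depth:
            self.depth += 1   # nested element inside a match
        elif dict(attrs).get(self.attr) == self.value:
            self.depth = 1    # entering a matching element
            self._buf = []

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self.depth:
            return
        self.depth -= 1
        if self.depth == 0:   # left the matching element: store its collapsed text
            self.matches.append(" ".join("".join(self._buf).split()))

    def handle_data(self, data):
        if self.depth:
            self._buf.append(data)


# Hypothetical template: field -> candidate (attribute, value) selectors, newest layout first.
TEMPLATE = {
    "title": [("data-testid", "product-title"), ("class", "sku-title")],
    "price": [("data-testid", "price-current"), ("class", "priceView-customer-price")],
}


def extract_field(html, candidates):
    """Return the first non-empty text matched by any candidate selector, else None."""
    for attr, value in candidates:
        collector = AttrTextCollector(attr, value)
        collector.feed(html)
        collector.close()
        for text in collector.matches:
            if text:
                return text
    return None


def parse_product(html, template=TEMPLATE):
    """Apply every field's fallback chain to one page."""
    return {field: extract_field(html, cands) for field, cands in template.items()}


old_layout = ('<div class="sku-title"><h1>Example TV</h1></div>'
              '<div class="priceView-customer-price"><span>$499.99</span></div>')
new_layout = ('<h1 data-testid="product-title">Example TV</h1>'
              '<span data-testid="price-current">$479.99</span>')
print(parse_product(old_layout))  # {'title': 'Example TV', 'price': '$499.99'}
print(parse_product(new_layout))  # {'title': 'Example TV', 'price': '$479.99'}
```

Both layouts yield the same fields because the template, not the parsing code, absorbs the change; updating a layout means adding one selector to the front of a list.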

Phase 2 – Automation & Scaling

Next, we deployed advanced scheduling and load-balancing components using our Web Data Intelligence API, enabling real-time extraction with parallelized jobs. Automated retries, anomaly detection, and validation rules ensured data consistency.
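The combination of parallel jobs and automated retries can be sketched in Python with `concurrent.futures.ThreadPoolExecutor` and exponential backoff. This is a simplified illustration under stated assumptions, not the Web Data Intelligence API itself: `fetch` stands for whatever function downloads one page, and `TransientError` is a hypothetical exception type for failures worth retrying (timeouts, temporary server errors).

```python
import random
import time
from concurrent.futures import ThreadPoolExecutor


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, 5xx responses)."""


def with_retries(fetch, url, max_attempts=4, base_delay=0.5, sleep=time.sleep):
    """Call fetch(url), retrying TransientError with exponential backoff and jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch(url)
        except TransientError:
            if attempt == max_attempts:
                raise  # give up; the batch runner records the failure
            delay = base_delay * 2 ** (attempt - 1)  # 0.5s, 1s, 2s, ...
            sleep(delay + random.uniform(0, 0.1 * delay))  # add up to 10% jitter


def run_batch(fetch, urls, workers=8, **retry_options):
    """Fetch URLs in parallel; return (results, failures), both keyed by URL."""
    results, failures = {}, {}
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {url: pool.submit(with_retries, fetch, url, **retry_options)
                   for url in urls}
        for url, future in futures.items():
            try:
                results[url] = future.result()
            except Exception as exc:  # exhausted retries or a non-retryable error
                failures[url] = repr(exc)
    return results, failures


attempts = {}


def flaky_fetch(url):
    """Stand-in fetcher: every URL times out once; 'bad' always fails."""
    attempts[url] = attempts.get(url, 0) + 1
    if url == "bad" or attempts[url] == 1:
        raise TransientError(f"timeout on {url}")
    return f"<html>{url}</html>"


results, failures = run_batch(flaky_fetch, ["p1", "p2", "bad"], workers=2,
                              sleep=lambda seconds: None)  # skip real waiting in the demo
print(results)   # {'p1': '<html>p1</html>', 'p2': '<html>p2</html>'}
print(failures)  # {'bad': "TransientError('timeout on bad')"}
```

Injecting `sleep` keeps the demo instant and makes the backoff testable; in a real crawler the worker count and delays would also need to respect the target site's rate limits.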

Phase 3 – Data Enrichment & Delivery

We applied categorization engines, attribute mapping, and normalization layers so that datasets could be consumed directly by analytics teams. Cleaned data was exported to the client’s BI tools in their preferred formats, fully automating daily workflows.
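A minimal Python sketch of the normalization and attribute-mapping step, followed by CSV export, might look like this. The raw field names, the `CATEGORY_MAP` taxonomy, and the availability strings are hypothetical examples, not the client's actual schema.

```python
import csv
import io
import re
from decimal import Decimal

# Hypothetical mapping from raw retailer category labels to the client's taxonomy.
CATEGORY_MAP = {
    "tvs": "Televisions",
    "4k ultra hd tvs": "Televisions",
    "laptops": "Computers > Laptops",
    "headphones": "Audio > Headphones",
}
FIELDS = ["sku", "title", "price_usd", "category", "in_stock"]


def parse_price(raw):
    """'$1,299.99' -> Decimal('1299.99'); None if the text holds no number."""
    match = re.search(r"\d[\d,]*(?:\.\d+)?", raw or "")
    return Decimal(match.group().replace(",", "")) if match else None


def normalize(record):
    """Map one raw scraped record onto a clean, typed row."""
    category = (record.get("category") or "").strip().lower()
    availability = (record.get("availability") or "").strip().lower()
    return {
        "sku": str(record["sku"]).strip(),
        "title": " ".join((record.get("title") or "").split()),  # collapse whitespace
        "price_usd": parse_price(record.get("price")),
        "category": CATEGORY_MAP.get(category, "Unmapped"),  # flag unknown labels
        "in_stock": availability in {"in stock", "available"},
    }


def to_csv(rows):
    """Serialize normalized rows to CSV text for a BI import."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


raw = {"sku": " SKU-0001 ", "title": "  Example   55-inch 4K TV ", "price": "$1,299.99",
       "category": "4K Ultra HD TVs", "availability": "In Stock"}
print(to_csv([normalize(raw)]))
```

Using `Decimal` for prices avoids floating-point rounding in downstream aggregates, and mapping unknown categories to `"Unmapped"` surfaces taxonomy gaps instead of silently dropping rows.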

Each phase eliminated a major bottleneck (reliability, scalability, and usability in turn), resulting in a robust, high-volume data solution.

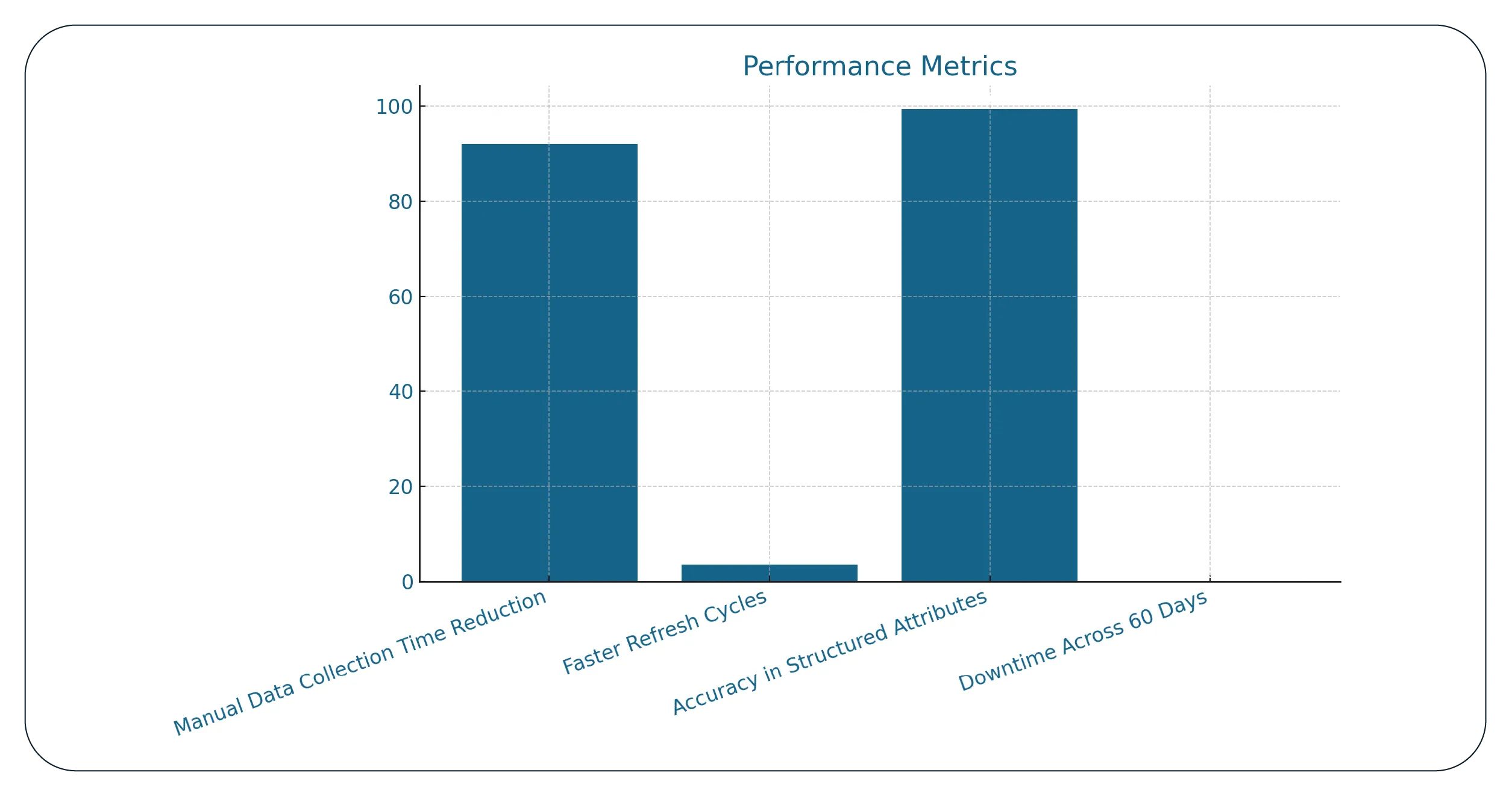

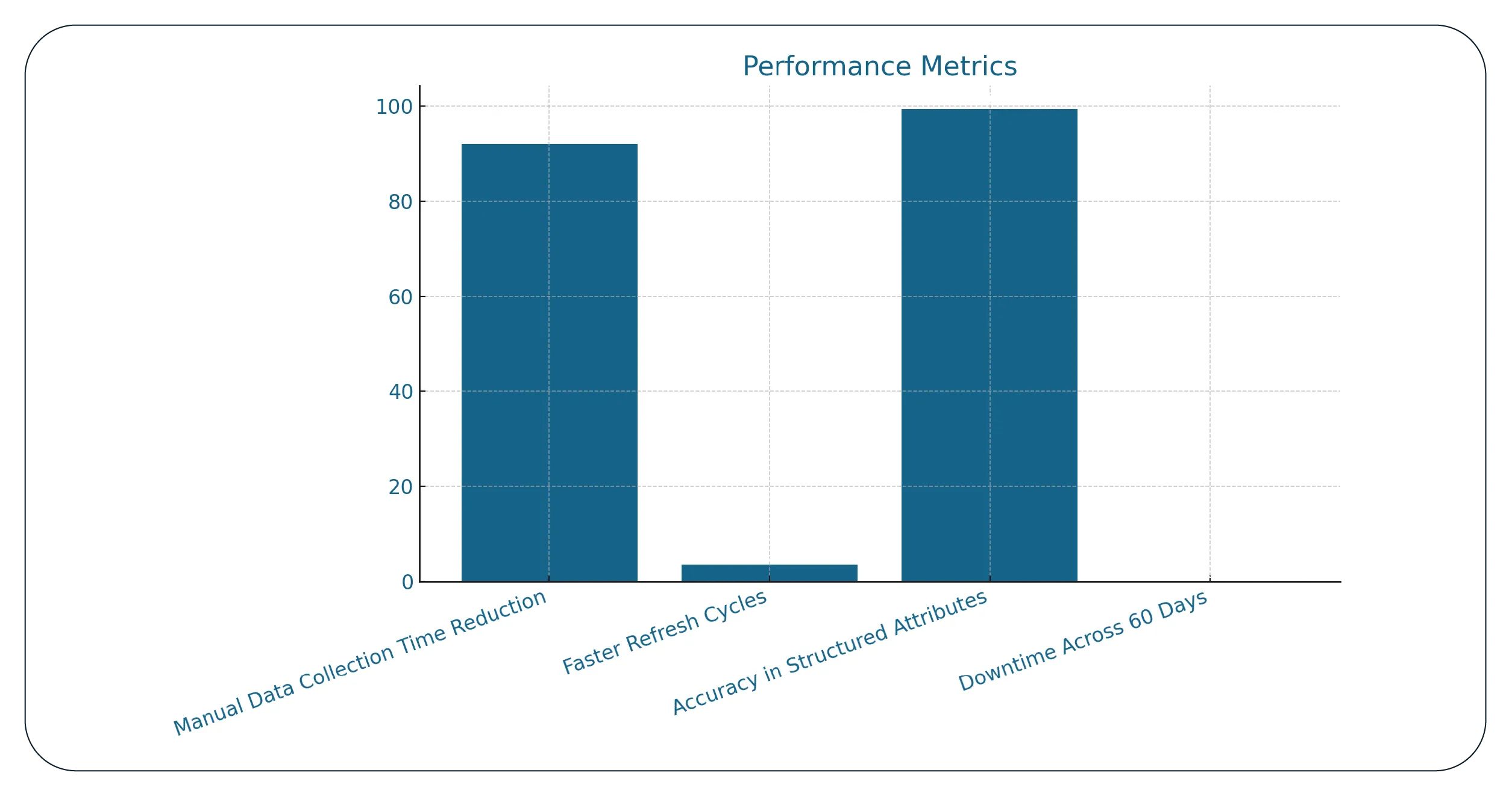

Results & Key Metrics

92% reduction in manual data collection time

3.5× faster refresh cycles for product listings

99.4% accuracy in structured attributes

0% downtime across 60-day monitoring period

Fully automated delivery to BI dashboards

System capable of handling 50,000+ URLs per run

Stable performance for no-code BestBuy scraping workflows

Results Narrative

The client successfully transitioned from fragmented manual processes to a fully automated, scalable data pipeline. Their analytics team gained continuous access to accurate, real-time product data, enabling faster decision-making and improved pricing and assortment strategies. Reporting efficiency increased dramatically, allowing them to deliver insights to their own clients much sooner. The new system provided stability, speed, and high-volume capacity that exceeded their internal benchmarks.

What Made Product Data Scrape Different?

Our approach stood out because it combined automation, adaptability, and performance-driven engineering. We used proprietary frameworks optimized for scale and precision, ensuring uninterrupted operation even when the website’s structure changed. Our smart quality checks, enrichment layers, and metadata mapping provided value beyond raw extraction. Together, these capabilities enabled stronger BestBuy scraping for e-commerce insights, giving clients a strategic edge in the electronics retail sector through highly reliable, analytics-ready datasets.

Client’s Testimonial

“Partnering with this team transformed our analytics operations. We now receive high-quality datasets daily without any manual intervention. Their expertise in handling large-scale retail extraction allowed us to improve our pricing models and market benchmarking significantly. The accuracy, structure, and reliability of the data have elevated our internal workflows and client deliverables. This solution has become central to our ecommerce data insights strategy.”

— Data Engineering Lead, Electronics Analytics Firm

Conclusion

This project demonstrates how structured automation can transform digital retail data operations. Our solution gave the client reliable extraction, rapid updates, and clean datasets ready for analytics. We continue to enhance our capabilities to scrape data from any e-commerce website, offering scalable infrastructure for future growth. By enabling the client to scrape BestBuy product pages in bulk accurately and efficiently, we laid the foundation for improved strategic decision-making, competitive intelligence, and long-term digital transformation.

FAQs

1. Can you extract data from thousands of BestBuy URLs at once?

Yes, our system is designed for high-volume extraction with parallel processing and dynamic load management.

2. How do you ensure data accuracy during large-scale scraping?

We use validation rules, schema checks, and anomaly detection to maintain accuracy across all product attributes.
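As an illustration of how those three layers can fit together, here is a minimal Python sketch: a schema check for required fields and types, rule checks for impossible values, and a simple anomaly test that flags large price swings against the previous run. The `SCHEMA` contents and the 50% threshold are assumptions chosen for the example, and prices are assumed to be plain floats.

```python
# Hypothetical schema: required field -> accepted type(s).
SCHEMA = {"sku": str, "title": str, "price_usd": (int, float), "in_stock": bool}


def schema_errors(record):
    """Report missing fields and fields with unexpected types."""
    errors = []
    for field, expected in SCHEMA.items():
        if record.get(field) is None:
            errors.append(f"missing {field}")
        elif not isinstance(record[field], expected):
            errors.append(f"bad type for {field}")
    return errors


def rule_errors(record):
    """Business rules that a well-typed record can still violate."""
    errors = []
    price = record.get("price_usd")
    if isinstance(price, (int, float)) and price <= 0:
        errors.append("non-positive price")
    title = record.get("title")
    if isinstance(title, str) and not title.strip():
        errors.append("empty title")
    return errors


def is_price_anomaly(current, previous, max_change=0.5):
    """True if the price moved by more than max_change (50%) since the last run."""
    if not previous or current is None:
        return False
    return abs(current - previous) / previous > max_change


def validate(record, previous_prices):
    """Return a list of problems; an empty list means the record passes."""
    errors = schema_errors(record) + rule_errors(record)
    previous = previous_prices.get(record.get("sku"))
    if not errors and is_price_anomaly(record["price_usd"], previous):
        errors.append("price anomaly")
    return errors


good = {"sku": "SKU-0001", "title": "Example TV", "price_usd": 479.99, "in_stock": True}
bad = {"sku": "SKU-0002", "title": "  ", "price_usd": -1.0}
print(validate(good, {"SKU-0001": 499.99}))  # []
print(validate(good, {"SKU-0001": 99.99}))   # ['price anomaly']
print(validate(bad, {}))  # ['missing in_stock', 'non-positive price', 'empty title']
```

An anomaly does not necessarily mean bad data (a real clearance sale looks the same), so a flagged record would typically be routed for review rather than discarded.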

3. Does the solution work even if BestBuy changes its layout?

Yes, our adaptive parsing system automatically updates templates, ensuring uninterrupted extraction.

4. Can the extracted data integrate into BI tools?

Absolutely. We support CSV, JSON, Excel, API delivery, and direct integrations with BI platforms.

5. Do you offer monitoring and automated retries?

Yes, every job includes monitoring, automated retries, and notifications to ensure stable and complete data delivery.
