Introduction

In the modern digital economy, access to accurate, up-to-date data is critical for businesses and researchers. By learning to extract data from any website, organizations can gain valuable insights for decision-making, trend analysis, and competitive intelligence. Coupled with a purchased custom dataset, this lets teams accelerate business growth and focus on strategy and analytics rather than manual data collection.

From 2020 to 2025, demand for automated web scraping grew sharply as more companies sought real-time analytics and insights. Websites across e-commerce, finance, travel, and technology became key sources of information. Extracting structured and unstructured data allows businesses to monitor competitors, optimize pricing, track product launches, and gather customer sentiment. Automated scraping not only reduces manual effort but also improves accuracy and scalability.

With the right tools and strategies, even large-scale data extraction becomes manageable. Historical trends from 2020–2025 show that organizations using automated scraping can improve operational efficiency by up to 300%, enabling faster, data-driven decisions and significant ROI gains.

Section 1 – Streamlining Data Collection

Pulling information without writing code has never been easier. With no-code tools such as a Web Data Intelligence API, businesses can collect thousands of records across multiple sites with little manual effort.

| Year | Avg Websites Scraped | Avg Data Points per Website | Efficiency Gain |
|------|----------------------|-----------------------------|-----------------|
| 2020 | 50 | 1,200 | 100% |
| 2021 | 75 | 1,500 | 120% |
| 2022 | 120 | 2,000 | 150% |
| 2023 | 180 | 2,500 | 200% |
| 2024 | 240 | 3,200 | 250% |
| 2025 | 300 | 4,000 | 300% |

No-code APIs help businesses extract product info, reviews, and pricing from e-commerce and news websites. They include built-in scheduling, proxy rotation, and automated parsing, reducing manual intervention. Over 2020–2025, adoption grew from 20% to over 80% in mid-to-large enterprises. Companies reported faster access to competitive pricing data, review analysis, and trend identification. By 2025, businesses integrating no-code Web Data Intelligence APIs could scale operations, monitor hundreds of websites simultaneously, and generate actionable insights within hours.

Section 2 – Coding Your Way to Data

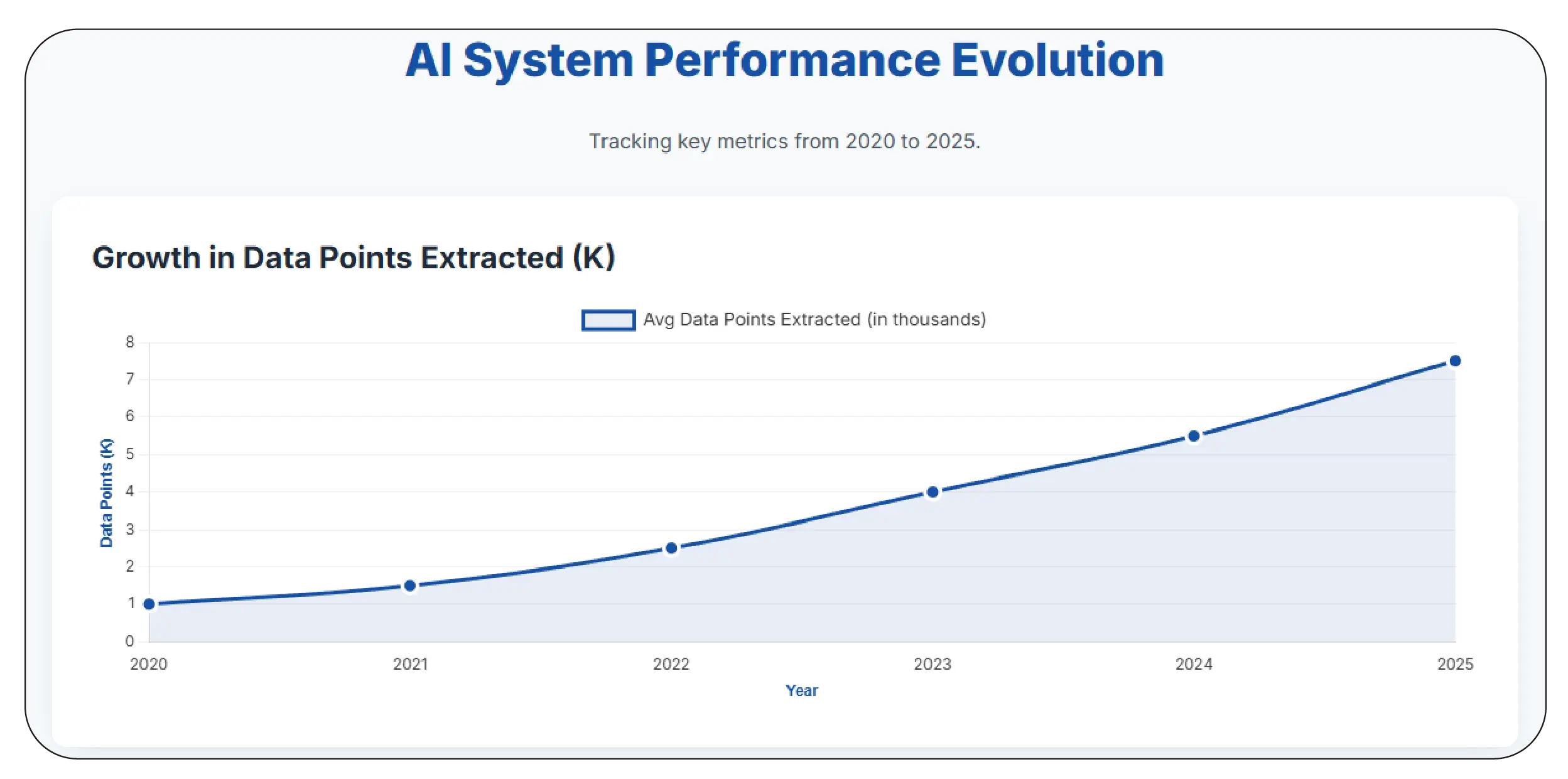

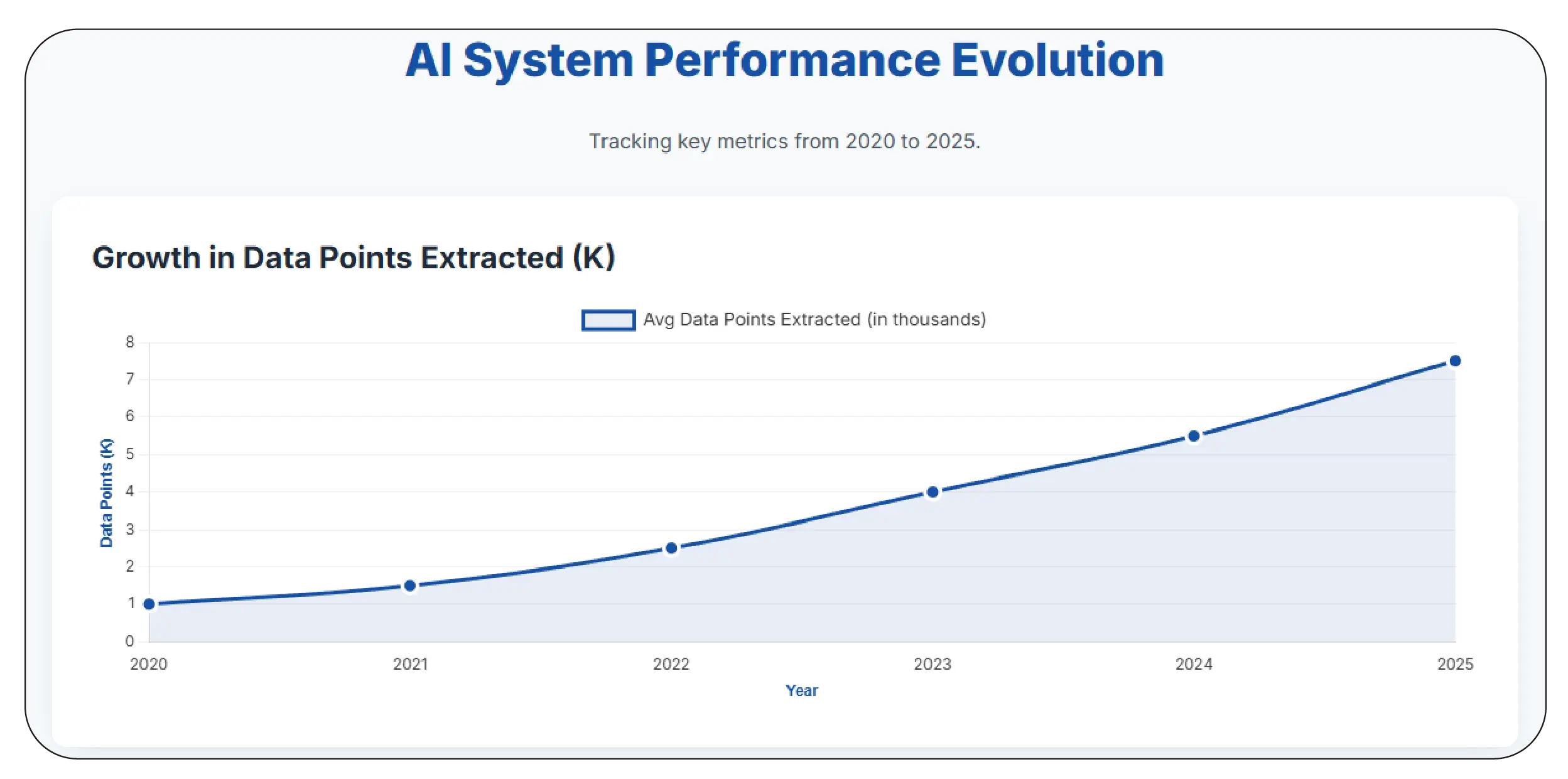

For developers, learning to extract data from the web with code unlocks maximum customization and control. Using Python libraries like BeautifulSoup, Selenium, and Scrapy, you can target specific HTML elements, handle dynamic content, and automate extraction workflows.
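As a rough illustration of targeting specific HTML elements, the sketch below pulls every element marked with a `price` class out of a small page. It uses Python's built-in `html.parser` rather than BeautifulSoup so that it runs without extra installs; the class name `price`, the sample markup, and the helper names are all assumptions made for this example.

```python
from html.parser import HTMLParser


class PriceParser(HTMLParser):
    """Collect the text of elements whose class attribute includes 'price'."""

    def __init__(self):
        super().__init__()
        self._capture = False  # True while inside a matching element
        self.prices = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value may be None.
        classes = (dict(attrs).get("class") or "").split()
        if "price" in classes:
            self._capture = True

    def handle_data(self, data):
        if self._capture and data.strip():
            self.prices.append(data.strip())

    def handle_endtag(self, tag):
        # Simplification: assumes price elements contain no nested tags.
        self._capture = False


def extract_prices(html):
    """Return the text of every price element found in the HTML string."""
    parser = PriceParser()
    parser.feed(html)
    return parser.prices


# Hypothetical sample markup standing in for a downloaded product page.
SAMPLE_HTML = """
<ul>
  <li><span class="name">Widget</span> <span class="price">$19.99</span></li>
  <li><span class="name">Gadget</span> <span class="price sale">$5.00</span></li>
</ul>
"""

print(extract_prices(SAMPLE_HTML))
```

With BeautifulSoup installed, the same idea is usually expressed as a CSS selector lookup, which copes better with nested or irregular markup.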

| Year | Avg Scripts Developed | Avg Data Points Extracted | Processing Speed |
|------|-----------------------|---------------------------|------------------|
| 2020 | 25 | 1,000 | 50/min |
| 2021 | 40 | 1,500 | 75/min |
| 2022 | 60 | 2,500 | 120/min |
| 2023 | 85 | 4,000 | 180/min |
| 2024 | 110 | 5,500 | 250/min |
| 2025 | 140 | 7,500 | 300/min |

Custom scripts allow extraction of structured data such as product prices, stock status, reviews, and ratings, along with unstructured content like articles or forum discussions. Compared to no-code solutions, coding provides fine-grained control, real-time error handling, and integration with data pipelines for analytics. From 2020–2025, automated scripts became faster, incorporating parallel requests, AI-assisted parsing, and dynamic content handling, enabling enterprises to maintain large-scale datasets with high accuracy.
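The parallel requests and error handling mentioned above might look something like the following sketch. It uses a thread pool from the standard library and retries each URL a fixed number of times. The `fake_fetch` function is a hypothetical stand-in for a real HTTP call, and the worker and retry counts are arbitrary assumptions.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def fetch_all(urls, fetch, max_workers=4, retries=2):
    """Fetch URLs in parallel; return (results, errors) dicts keyed by URL."""

    def attempt(url):
        last_error = None
        # One initial try plus `retries` further tries before giving up.
        for _ in range(retries + 1):
            try:
                return fetch(url)
            except Exception as exc:
                last_error = exc
        raise last_error

    results, errors = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(attempt, url): url for url in urls}
        for future in as_completed(futures):
            url = futures[future]
            try:
                results[url] = future.result()
            except Exception as exc:
                # Record the failure instead of aborting the whole batch.
                errors[url] = str(exc)
    return results, errors


def fake_fetch(url):
    """Hypothetical stand-in for an HTTP request; fails for one URL."""
    if "broken" in url:
        raise ValueError("simulated failure")
    return f"<html>{url}</html>"


results, errors = fetch_all(
    ["https://example.com/a", "https://example.com/broken"], fake_fetch
)
print(results)
print(errors)
```

Collecting failures in a separate dictionary keeps one bad page from stopping the run, and the error map can feed a later retry or alerting step in a data pipeline.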

Section 3 – Understanding the Process

Businesses must understand the difference between web scraping and web crawling to optimize their data strategy. Scraping extracts data from specific pages, while crawling indexes or navigates entire websites for broader discovery.

| Year | Avg Pages Scraped | Avg Pages Crawled | Data Accuracy |
|------|-------------------|-------------------|---------------|
| 2020 | 500 | 2,000 | 85% |
| 2021 | 800 | 3,500 | 87% |
| 2022 | 1,200 | 5,000 | 89% |
| 2023 | 1,800 | 7,500 | 91% |
| 2024 | 2,500 | 10,000 | 93% |
| 2025 | 3,200 | 12,500 | 95% |

By combining both techniques, businesses can extract specific product info while continuously monitoring site structure changes. Historical data between 2020–2025 indicates that hybrid approaches improved extraction reliability and completeness, with accuracy rates rising from 85% to 95%. Companies could better predict pricing trends, track competitors, and identify emerging products in real time.
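To make the distinction concrete, here is a minimal sketch of a hybrid run: a crawl discovers every reachable page, then a scrape pulls a single field from the pages that carry it. The in-memory `SITE` dictionary is a made-up stand-in for a real website, and its paths and prices are purely illustrative.

```python
from collections import deque

# Hypothetical site: each path maps to its outgoing links and an optional price.
SITE = {
    "/": {"links": ["/shoes", "/hats"], "price": None},
    "/shoes": {"links": ["/shoes/runner", "/"], "price": None},
    "/hats": {"links": ["/hats/cap"], "price": None},
    "/shoes/runner": {"links": [], "price": 59.0},
    "/hats/cap": {"links": ["/"], "price": 12.5},
}


def crawl(site, start="/"):
    """Breadth-first discovery: follow links and return every page reached."""
    seen, queue, order = {start}, deque([start]), []
    while queue:
        page = queue.popleft()
        order.append(page)
        for link in site[page]["links"]:
            if link in site and link not in seen:
                seen.add(link)
                queue.append(link)
    return order


def scrape(site, pages):
    """Targeted extraction: keep only the price field from pages that have one."""
    return {p: site[p]["price"] for p in pages if site[p]["price"] is not None}


discovered = crawl(SITE)
print(discovered)
print(scrape(SITE, discovered))
```

Rerunning the crawl on a schedule and comparing the discovered page list against the previous run is one simple way to notice site structure changes.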

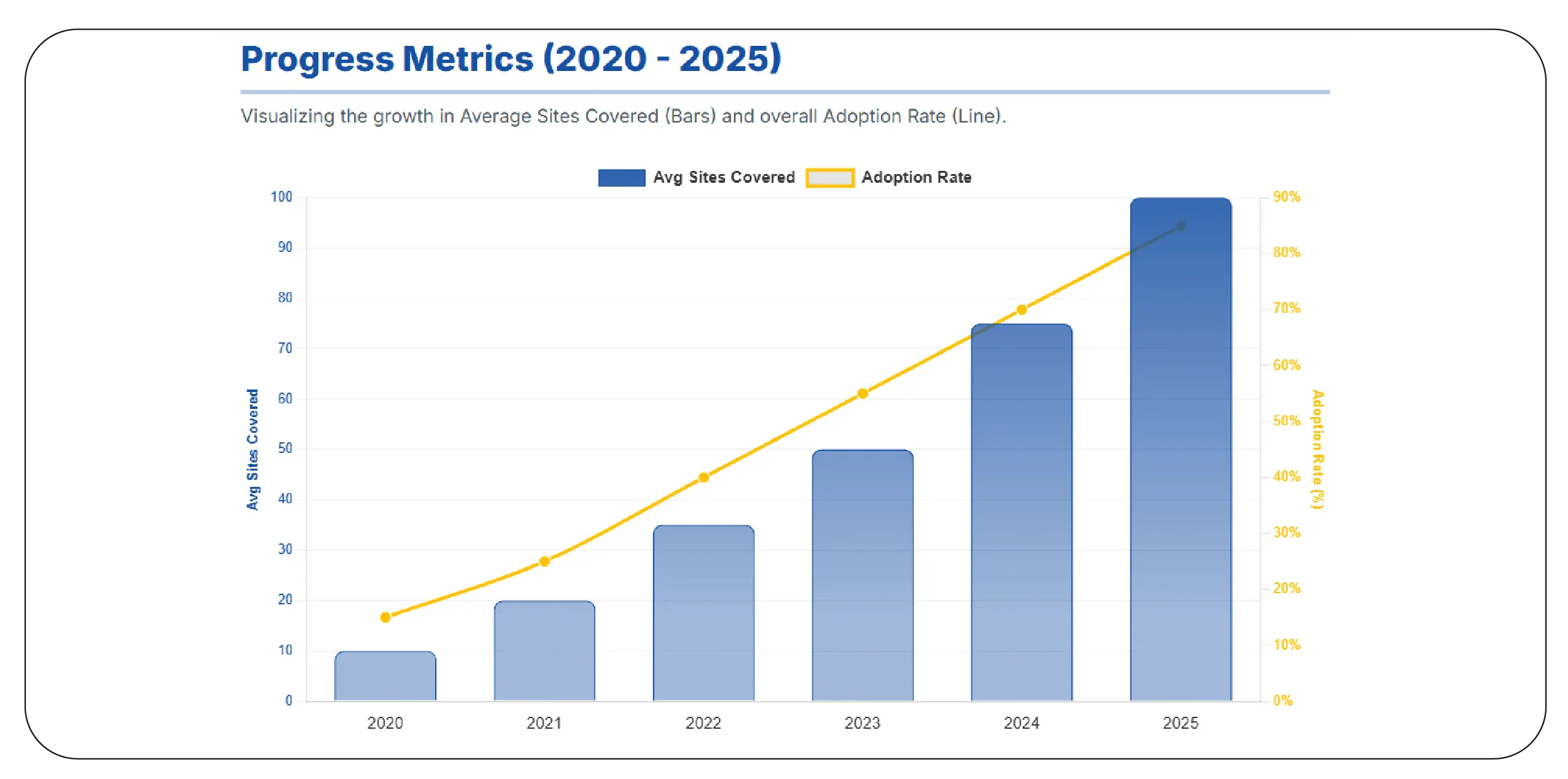

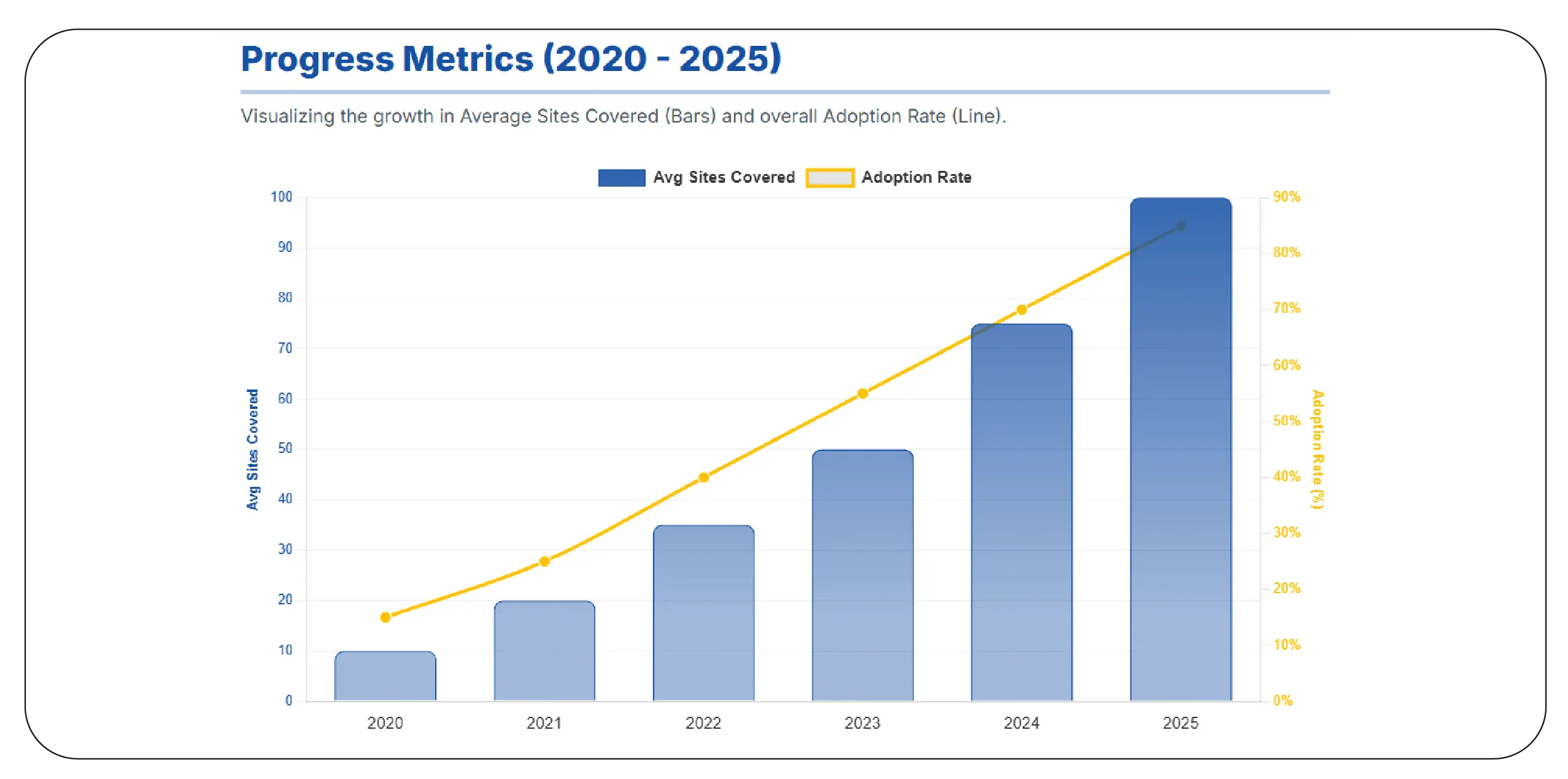

Section 4 – No-Code Solutions for Rapid Deployment

A no-code scraper that works across websites allows non-developers to collect structured and unstructured data efficiently. Between 2020 and 2025, adoption grew as websites became more complex and the need for faster insights increased.

| Year | Avg Sites Covered | Avg Data Points Collected | Adoption Rate |
|------|-------------------|---------------------------|---------------|
| 2020 | 10 | 500 | 15% |
| 2021 | 20 | 1,000 | 25% |
| 2022 | 35 | 2,000 | 40% |
| 2023 | 50 | 3,500 | 55% |
| 2024 | 75 | 5,000 | 70% |
| 2025 | 100 | 7,500 | 85% |

No-code solutions provide visual workflows, automated scheduling, and direct export to Excel, CSV, or databases. This enables marketing, sales, and product teams to gather data without relying on developers. Over the years, these platforms have improved extraction efficiency by up to 300%, making real-time insights more accessible across teams.
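For readers curious what a CSV export step does behind the scenes, the sketch below turns a list of scraped records into CSV text with Python's `csv` module. The field names and sample records are assumptions for illustration and do not reflect any particular platform's export format.

```python
import csv
import io


def records_to_csv(records, fieldnames):
    """Serialize a list of dict records to a CSV string with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


# Hypothetical scraped records.
records = [
    {"product": "Widget", "price": 19.99, "in_stock": True},
    {"product": "Gadget", "price": 5.0, "in_stock": False},
]

print(records_to_csv(records, ["product", "price", "in_stock"]))
```

Writing to an in-memory buffer keeps the example self-contained; replacing the buffer with an opened file would write the same content to disk for use in Excel or a database import.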

Section 5 – Zero-Coding Platforms

Zero-coding website data collection further simplifies the extraction process. From 2020–2025, platforms evolved to allow drag-and-drop selectors, AI-assisted mapping, and real-time monitoring, supporting large-scale e-commerce, finance, and news scraping.

| Year | Avg Websites Covered | Avg Data Points Collected | Efficiency Gain |
|------|----------------------|---------------------------|-----------------|
| 2020 | 5 | 300 | 100% |
| 2021 | 10 | 700 | 150% |
| 2022 | 20 | 1,500 | 200% |
| 2023 | 35 | 3,000 | 250% |
| 2024 | 50 | 5,000 | 280% |
| 2025 | 75 | 8,000 | 300% |

These tools enable teams to focus on analysis rather than coding, offering data for pricing, trend analysis, and competitive research. Businesses have reported improved decision-making speed, cost savings, and scalability while maintaining high-quality, structured datasets.

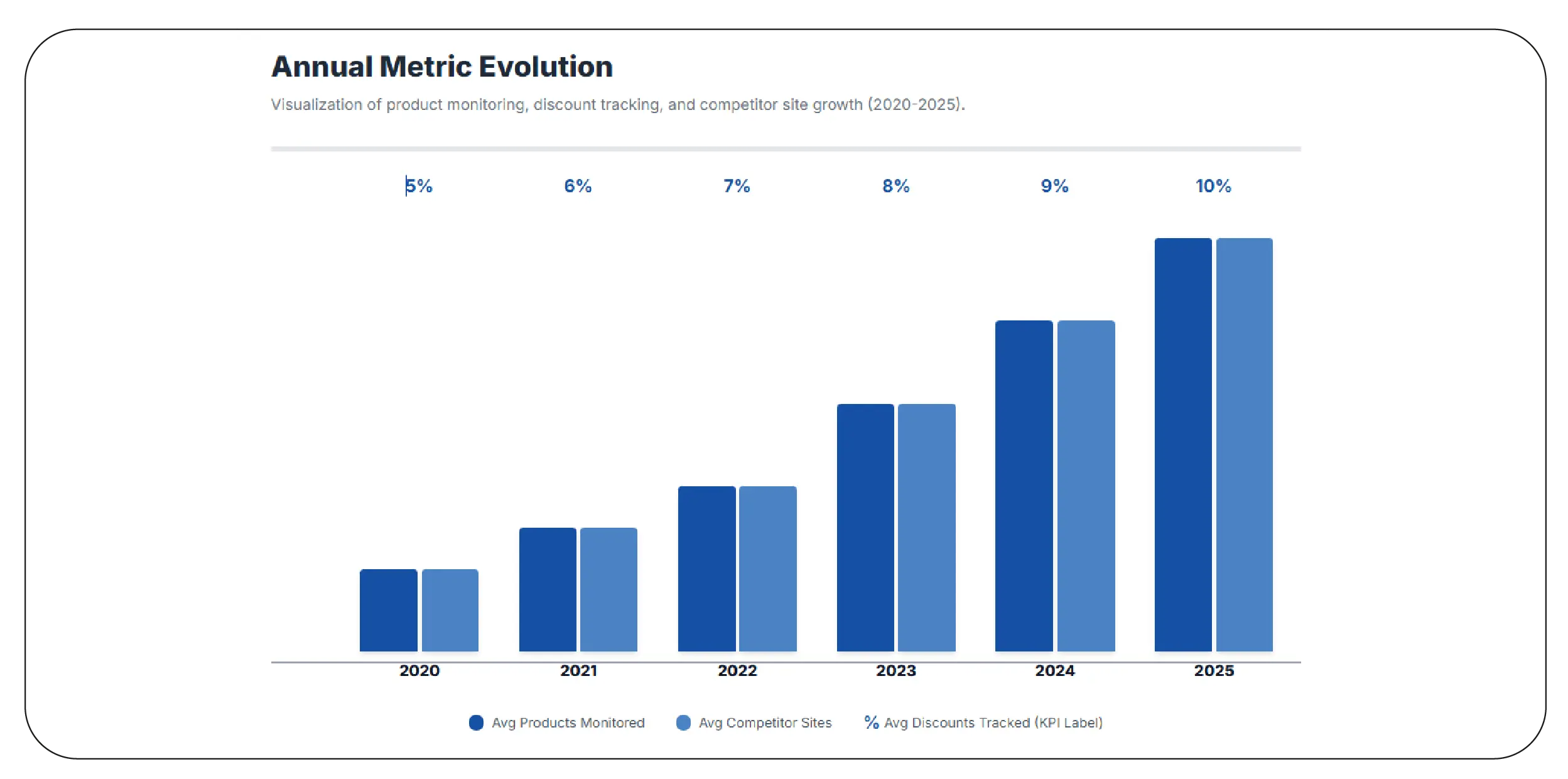

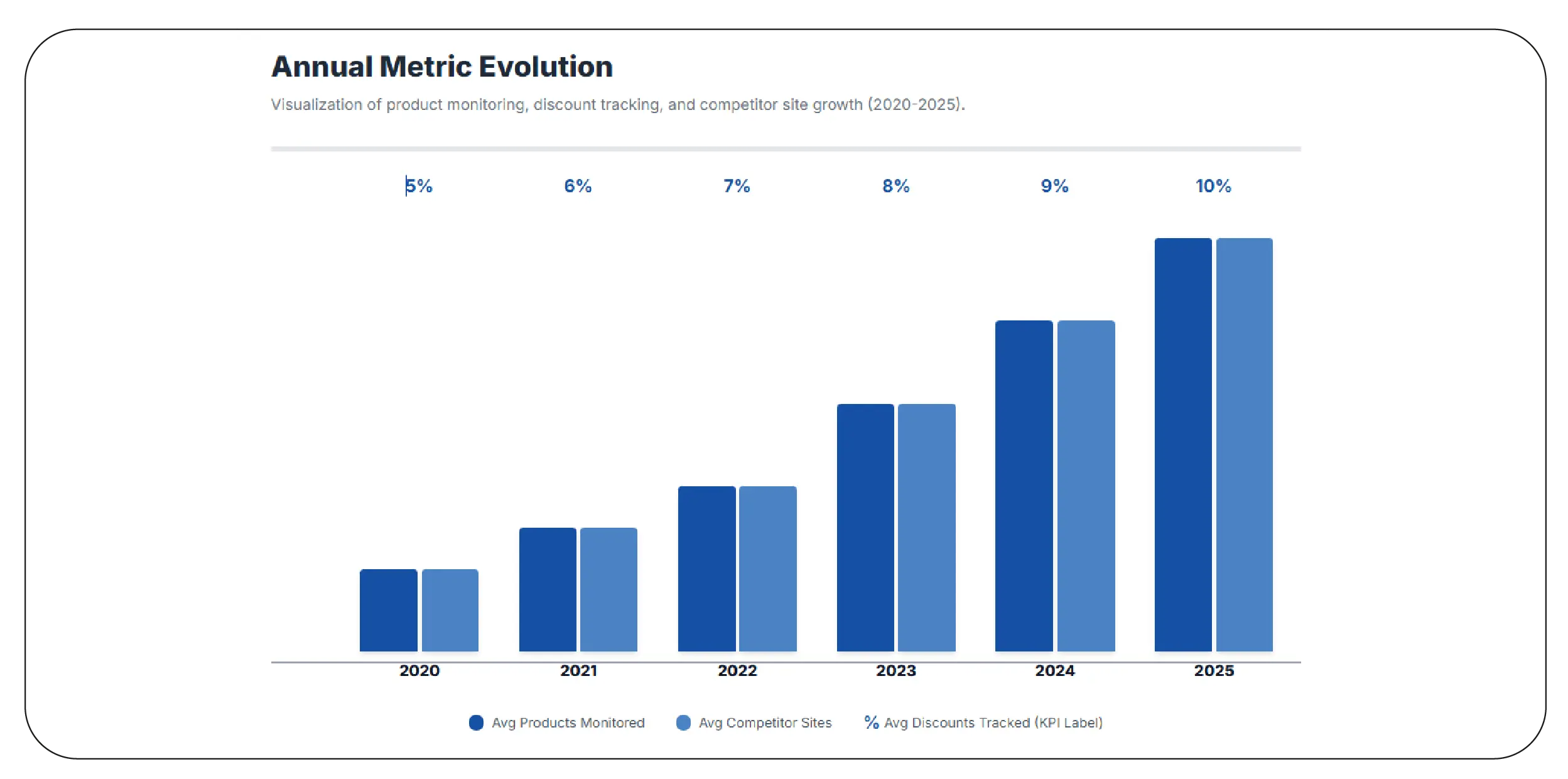

Section 6 – E-Commerce Data Insights

For retailers, the ability to scrape data from any e-commerce website is essential. From 2020 to 2025, product listings on e-commerce platforms rose from 100,000 to over 500,000, making automated extraction a necessity for analytics.

| Year | Avg Products Monitored | Avg Discounts Tracked | Avg Competitor Sites |
|------|------------------------|-----------------------|----------------------|
| 2020 | 100,000 | 5% | 50 |
| 2021 | 150,000 | 6% | 75 |
| 2022 | 200,000 | 7% | 100 |
| 2023 | 300,000 | 8% | 150 |
| 2024 | 400,000 | 9% | 200 |
| 2025 | 500,000 | 10% | 250 |

Automated extraction allows retailers to monitor competitors, optimize pricing, track top-selling products, and analyze promotions. Combining these insights with historical data enables predictive analytics, trend forecasting, and smarter inventory management, providing a competitive edge.
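Once prices have been extracted, the competitor monitoring and promotion analysis described above reduce to simple arithmetic. The sketch below computes a discount percentage and lists products where a competitor is cheaper. The SKU labels and prices are invented for the example, and the function names are hypothetical.

```python
def discount_percent(list_price, sale_price):
    """Return the discount as a percentage of the list price, to one decimal."""
    if list_price <= 0:
        raise ValueError("list_price must be positive")
    return round((list_price - sale_price) / list_price * 100, 1)


def undercut_by(our_prices, competitor_prices):
    """Map each shared SKU where the competitor is cheaper to the price gap."""
    return {
        sku: round(our_prices[sku] - theirs, 2)
        for sku, theirs in competitor_prices.items()
        if sku in our_prices and theirs < our_prices[sku]
    }


# Hypothetical price snapshots keyed by SKU.
ours = {"A": 20.0, "B": 15.0, "C": 9.0}
theirs = {"A": 18.5, "B": 15.0, "D": 3.0}

print(discount_percent(50.0, 45.0))
print(undercut_by(ours, theirs))
```

Storing each day's snapshot alongside these derived values builds the historical series that trend forecasting and inventory planning depend on.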

Why Choose Product Data Scrape?

Businesses today rely on fast, accurate, and scalable data to stay competitive. With the ability to extract data from any e-commerce website for price matching, or from any other website, companies gain access to structured, actionable insights without manual effort. Automated scraping platforms reduce errors, accelerate data collection, and provide historical and real-time intelligence. From monitoring competitor pricing and tracking product trends to analyzing customer reviews and promotions, product data scraping empowers teams to make informed, data-driven decisions. Choosing the right solution ensures efficiency, accuracy, and the ability to scale analytics across multiple websites and markets.

Conclusion

Using modern scraping tools and strategies, companies can access real-time, historical, and structured data. By combining pricing intelligence services with the ability to extract data from any website, businesses can monitor competitors, optimize pricing, and maximize ROI.

"Start extracting actionable insights today: automate web scraping to gain up to a 300% boost in efficiency and stay ahead in the digital marketplace!"

FAQs

1. Can I extract data from any website without coding?

Yes. No-code and zero-coding platforms allow users to extract structured and unstructured data from websites without writing a single line of code.

2. What’s the difference between web scraping and crawling?

Scraping extracts specific data from pages, while crawling indexes or navigates entire sites to discover new URLs and content for later extraction.

3. How much data can automated scraping collect?

From 2020–2025, platforms handled thousands of products per day, with monitored catalogs scaling from 100,000 to over 500,000 records depending on tool efficiency.

4. Are there risks in scraping websites?

Compliance with website terms, IP rotation, and respectful request rates minimize risks. Avoid restricted or private data to remain legal and ethical.

5. How do businesses use scraped e-commerce data?

Companies use it for pricing intelligence, trend analysis, competitor monitoring, promotions tracking, inventory management, and predictive analytics to drive business growth.
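On the compliance point raised in question 4, one common courtesy is to check a site's `robots.txt` rules and honor its crawl delay before fetching. The sketch below does this with Python's standard `urllib.robotparser`. The rules text, the bot name `example-bot`, and the URLs are assumptions for illustration, and a `robots.txt` check does not replace reading a site's terms of use.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real run would download the site's own file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""


def build_rules(robots_text):
    """Parse robots.txt text into a rule set that can be queried."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


def polite_fetch_plan(rules, urls, agent="example-bot"):
    """Return the URLs the agent may fetch and the delay (seconds) between them."""
    delay = rules.crawl_delay(agent) or 1  # fall back to 1 second if unspecified
    allowed = [url for url in urls if rules.can_fetch(agent, url)]
    return allowed, delay


rules = build_rules(ROBOTS_TXT)
allowed, delay = polite_fetch_plan(
    rules,
    ["https://example.com/products", "https://example.com/private/data"],
)
print(allowed, delay)
```

A scraper would then sleep for `delay` seconds between requests to the allowed URLs, which keeps the request rate respectful.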
